publications

More details about my publications, as well as pre-prints of recent papers, can be found on my ResearchGate page, Google Scholar, and DBLP.

2025


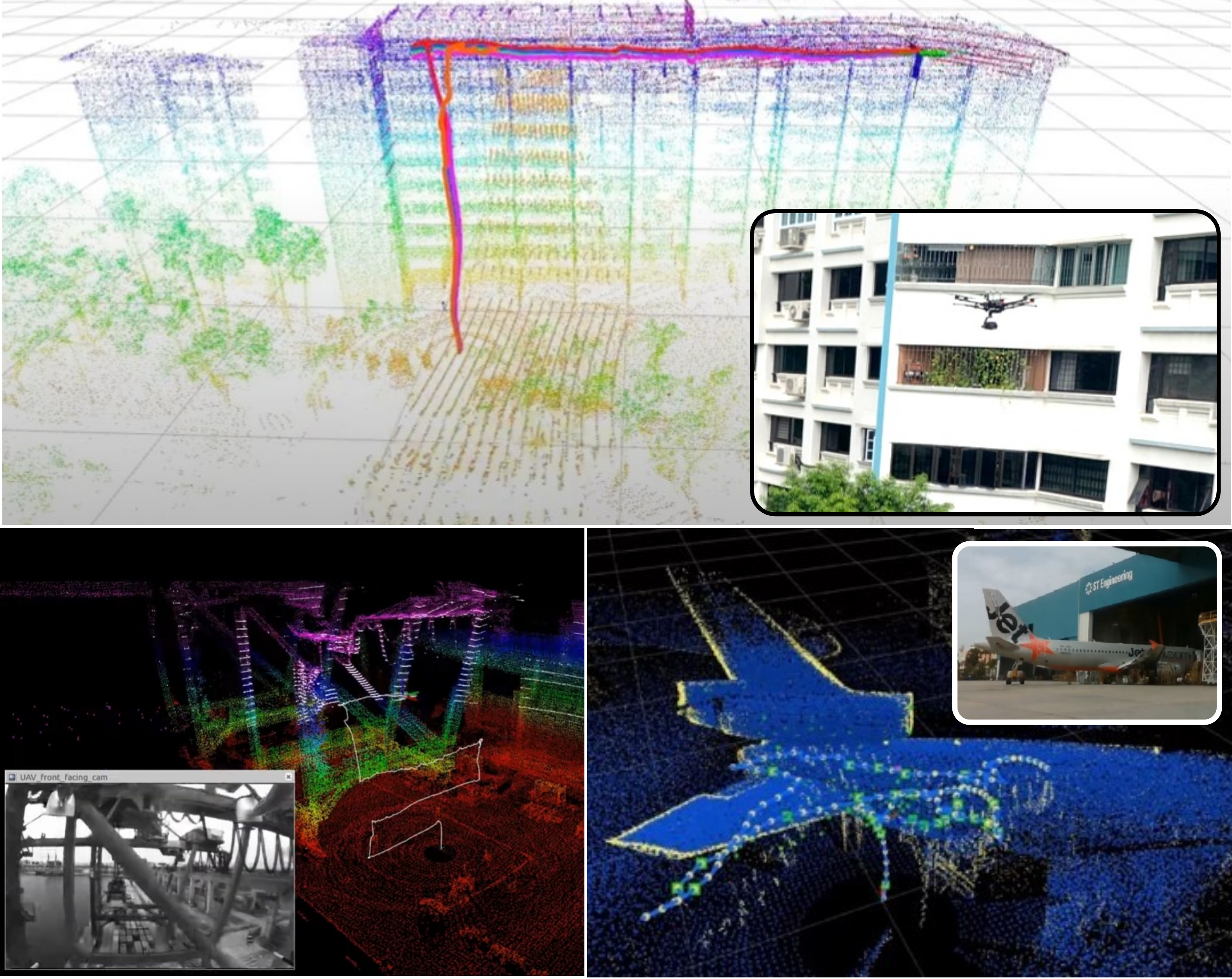

Cooperative Aerial Robot Inspection Challenge: A Benchmark for Heterogeneous Multi-Uncrewed-Aerial-Vehicle Planning and Lessons Learned. Muqing Cao, Thien-Minh Nguyen, Shenghai Yuan, Andreas Anastasiou, Angelos Zacharia, Savvas Papaioannou, Panayiotis Kolios, Christos G. Panayiotou, Marios M. Polycarpou, Xinhang Xu, Mingjie Zhang, Fei Gao, Boyu Zhou, Ben M. Chen, and Lihua Xie. IEEE Robotics and Automation Magazine, 2025.

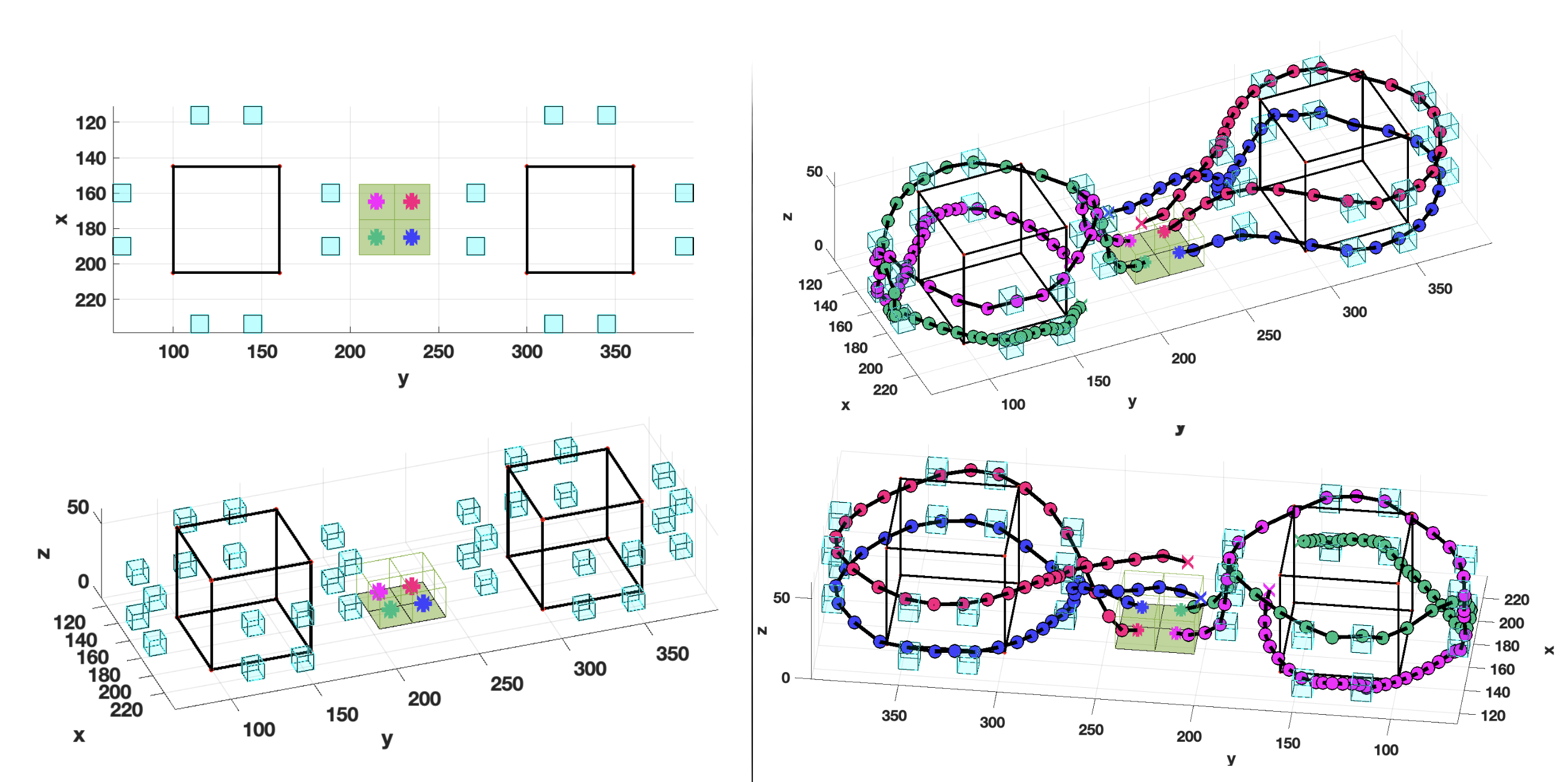

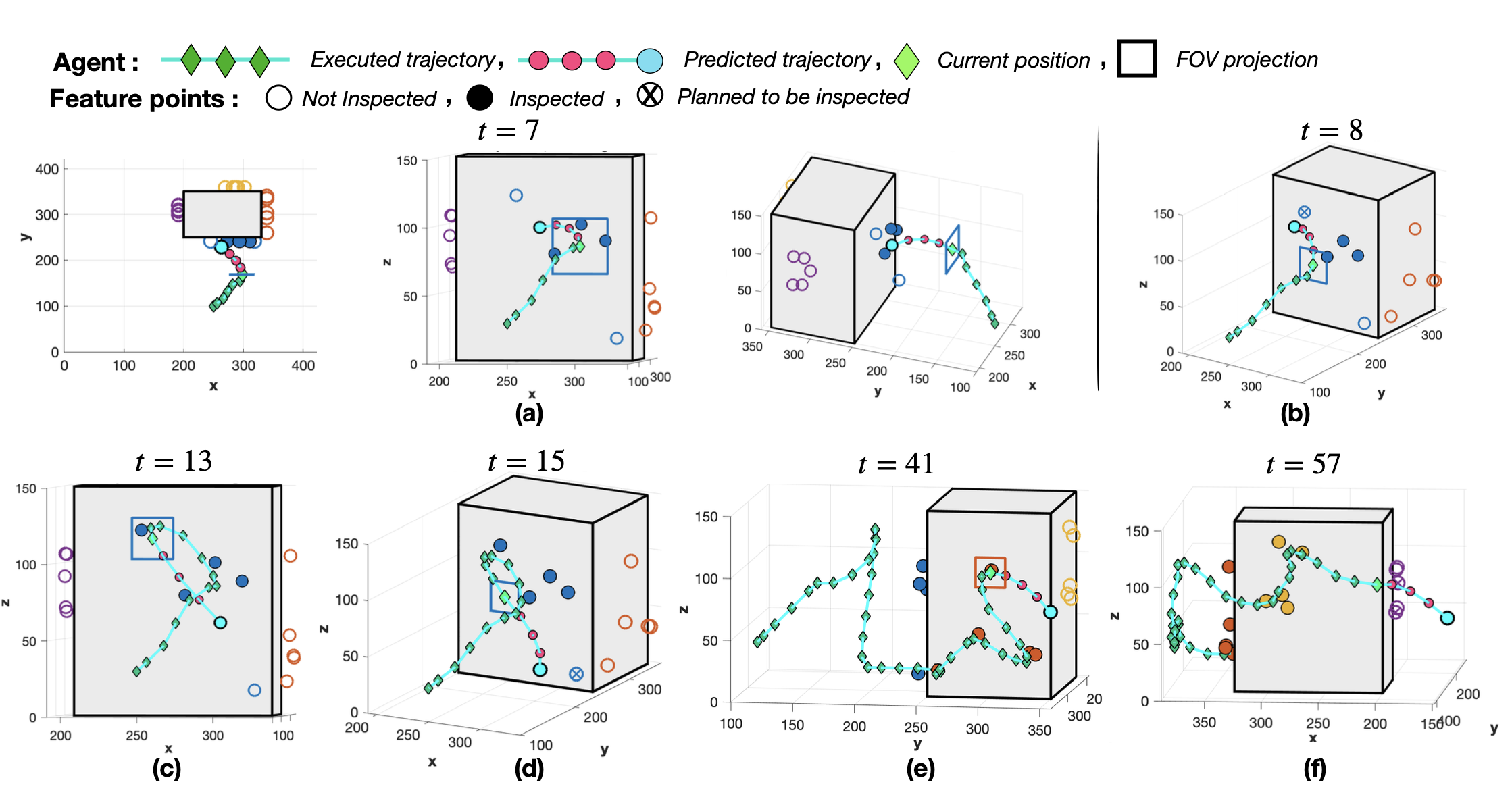

We propose the Cooperative Aerial Robot Inspection Challenge (CARIC), a simulation-based benchmark for motion planning algorithms in heterogeneous multi-uncrewed-aerial-vehicle (UAV) systems. CARIC features UAV teams with complementary sensors, realistic constraints, and evaluation metrics prioritizing inspection quality and efficiency. It offers a ready-to-use perception-control software stack and diverse scenarios to support the development and evaluation of task allocation and motion planning algorithms. Competitions using CARIC were held at the 2023 IEEE Conference on Decision and Control (CDC) and the IROS 2024 Workshop on Multi-Robot Perception and Navigation, attracting innovative solutions from research teams worldwide. This article examines the top three teams from CDC 2023, analyzing their exploration, inspection, and task allocation strategies while drawing insights into their performance across scenarios. The results highlight the task’s complexity and suggest promising research directions in cooperative multi-UAV systems. The simulation framework, including the source code and detailed instructions, is publicly available at https://ntu-aris.github.io/caric.

@article{RAM2025, title = {Cooperative Aerial Robot Inspection Challenge: A Benchmark for Heterogeneous Multi-Uncrewed-Aerial-Vehicle Planning and Lessons Learned}, author = {Cao, Muqing and Nguyen, Thien-Minh and Yuan, Shenghai and Anastasiou, Andreas and Zacharia, Angelos and Papaioannou, Savvas and Kolios, Panayiotis and Panayiotou, Christos G. and Polycarpou, Marios M. and Xu, Xinhang and Zhang, Mingjie and Gao, Fei and Zhou, Boyu and Chen, Ben M. and Xie, Lihua}, journal = {IEEE Robotics and Automation Magazine}, volume = {}, number = {}, pages = {2-13}, year = {2025}, issn = {1558-223X}, doi = {10.1109/MRA.2025.3584341}, }

Rolling horizon coverage control with collaborative autonomous agents. Savvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G. Panayiotou, and Marios M. Polycarpou. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 2025.

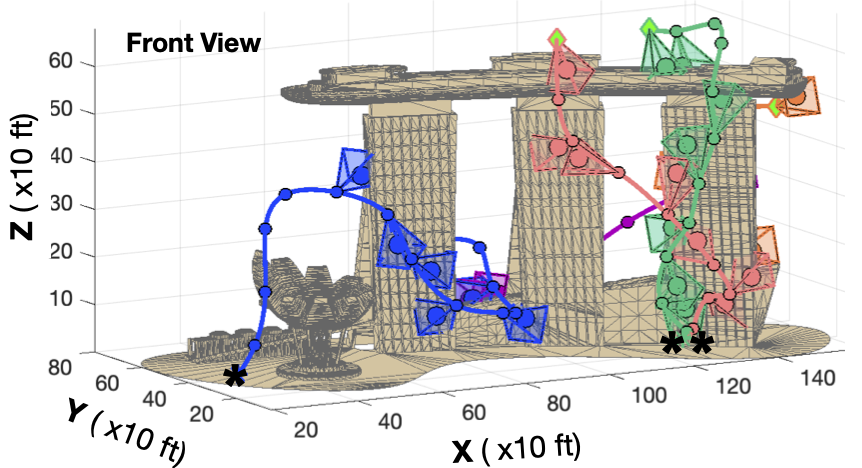

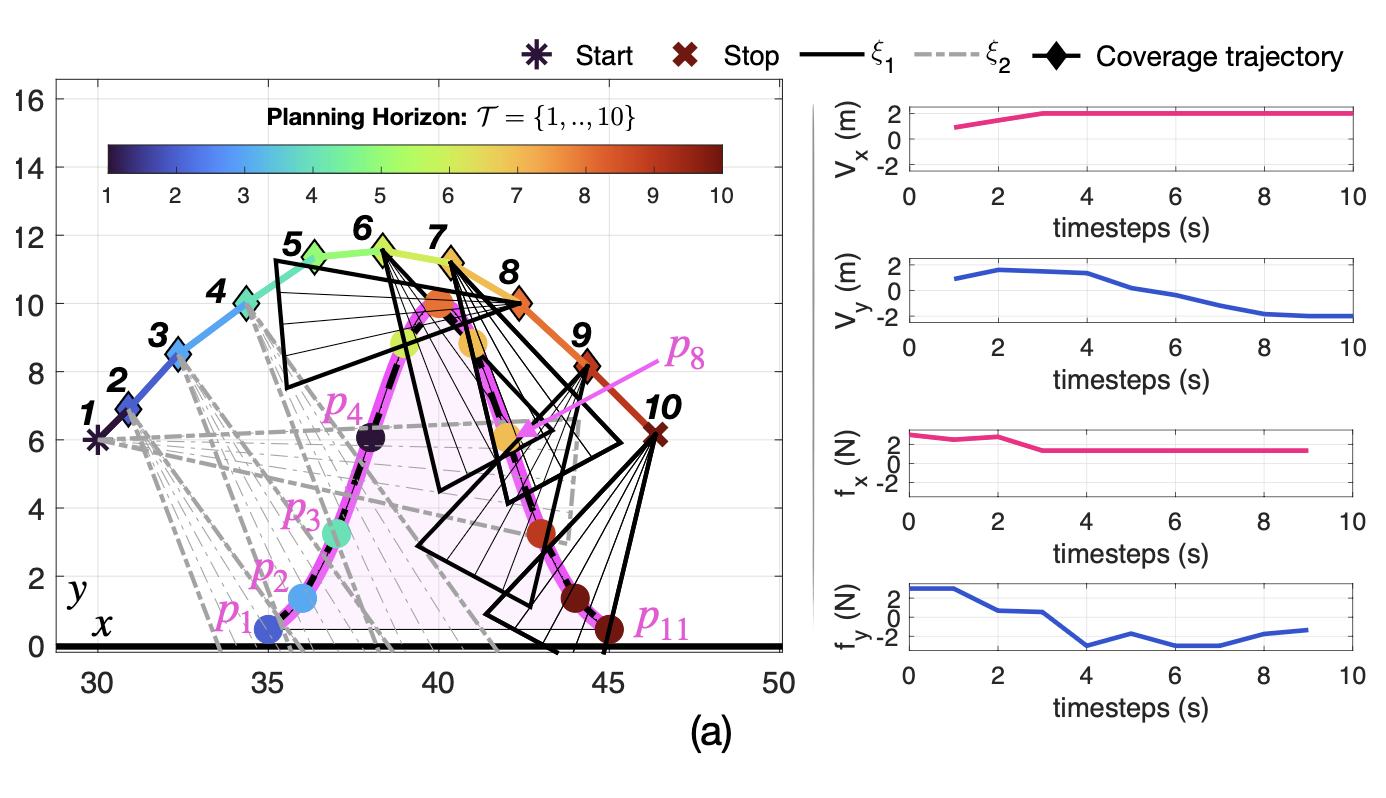

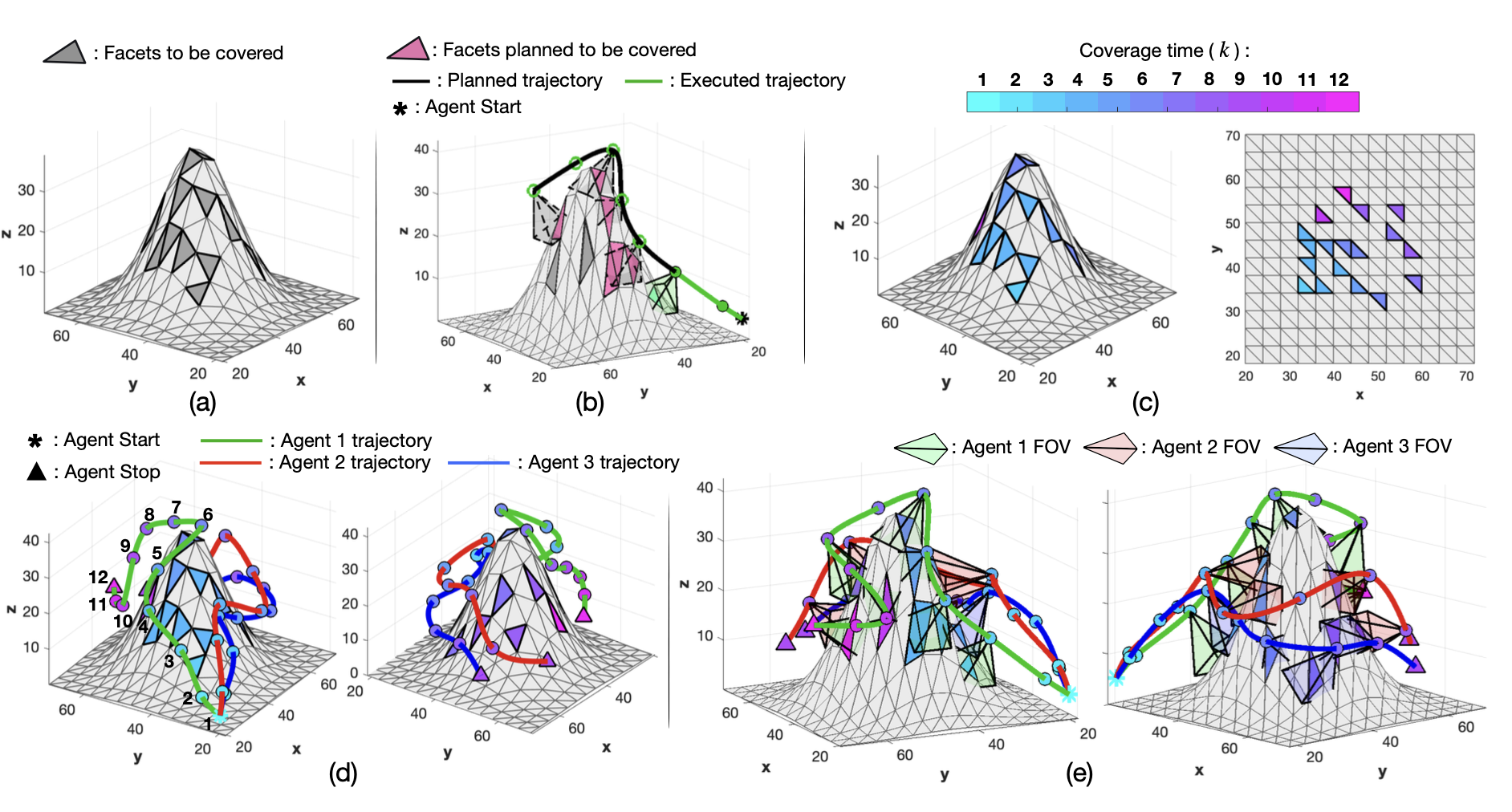

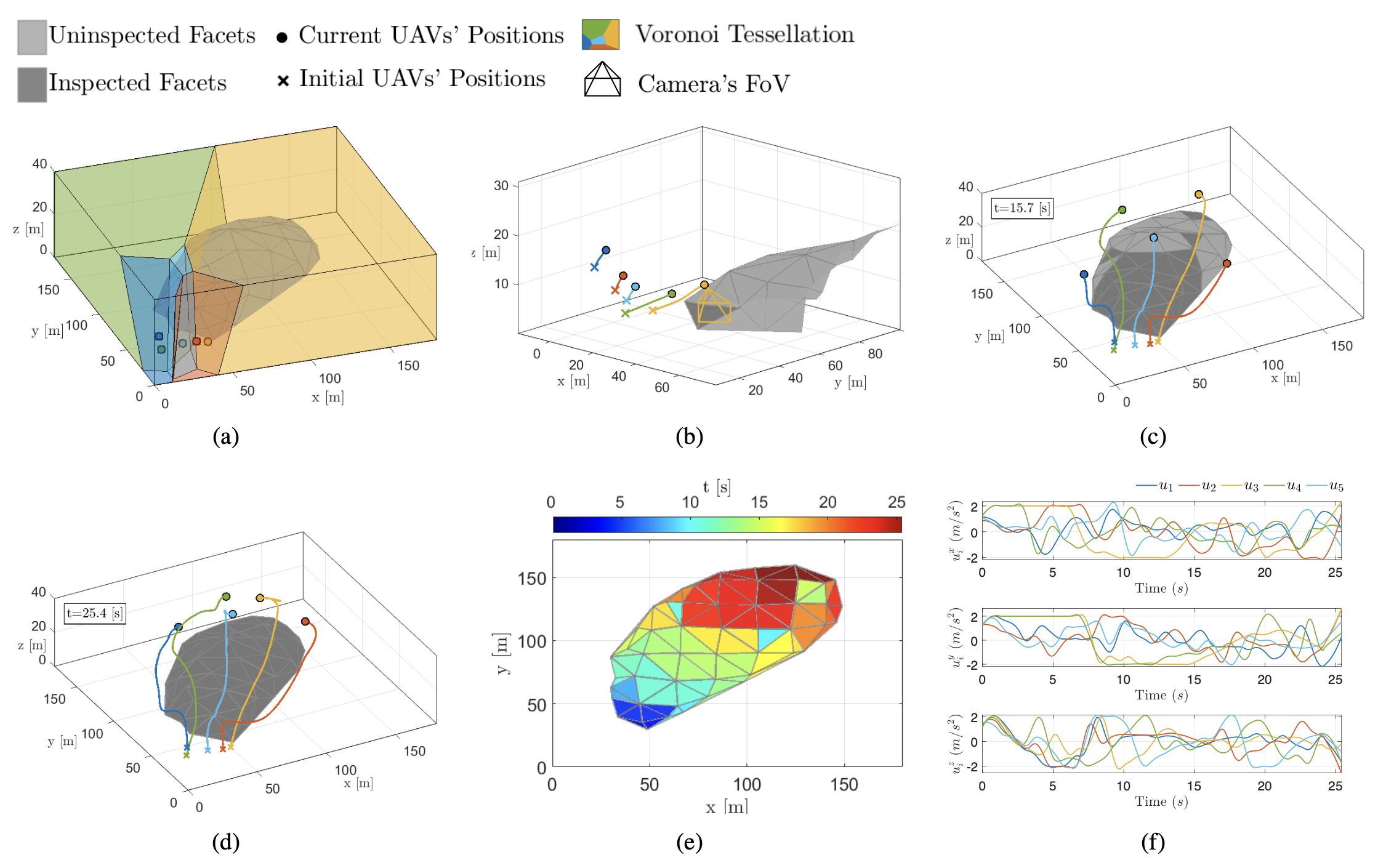

This work proposes a coverage controller that enables an aerial team of distributed autonomous agents to collaboratively generate non-myopic coverage plans over a rolling finite horizon, aiming to cover specific points on the surface area of a three-dimensional object of interest. The collaborative coverage problem, formulated as a distributed model predictive control problem, optimizes the agents’ motion and camera control inputs, while considering inter-agent constraints aiming at reducing work redundancy. The proposed coverage controller integrates constraints based on light-path propagation techniques to predict the parts of the object’s surface that are visible with regard to the agents’ future anticipated states. This work also demonstrates how complex, non-linear visibility assessment constraints can be converted into logical expressions that are embedded as binary constraints into a mixed-integer optimization framework. The proposed approach has been demonstrated through simulations and practical applications for inspecting buildings with unmanned aerial vehicles (UAVs).

@article{papaioannou2025rolling, title = {Rolling horizon coverage control with collaborative autonomous agents}, author = {Papaioannou, Savvas and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G and Polycarpou, Marios M}, journal = {Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences}, volume = {383}, number = {2289}, year = {2025}, publisher = {The Royal Society}, doi = {10.1098/rsta.2024.0146}, }
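Turning visibility conditions into logical expressions that enter a mixed-integer program as binary constraints, as described in the abstract above, is commonly done with the big-M technique. The block below is a generic textbook sketch of that encoding, not necessarily the formulation used in the paper: the scalar condition $g(\cdot)$ (for example "point $p$ lies inside the camera's field of view at step $k$"), the binaries $b_k$ and $z$, the horizon length $T$, and the constant $M$ (any upper bound on $g$ over the feasible states) are all introduced here for illustration.

```latex
\begin{aligned}
&b_k = 1 \;\Rightarrow\; g(x_k) \le 0
  &&\text{encoded as}\quad g(x_k) \le M\,(1 - b_k),\quad b_k \in \{0,1\},\\
&z = b_1 \wedge b_2
  &&\text{encoded as}\quad z \le b_1,\quad z \le b_2,\quad z \ge b_1 + b_2 - 1,\quad z \in \{0,1\},\\
&\text{point } p \text{ covered at least once}
  &&\text{encoded as}\quad \textstyle\sum_{k=1}^{T} b_k \ge 1.
\end{aligned}
```

When $b_k = 0$ the first constraint relaxes to $g(x_k) \le M$, which never binds, so the solver can only claim a point as visible at step $k$ when the underlying condition actually holds there.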

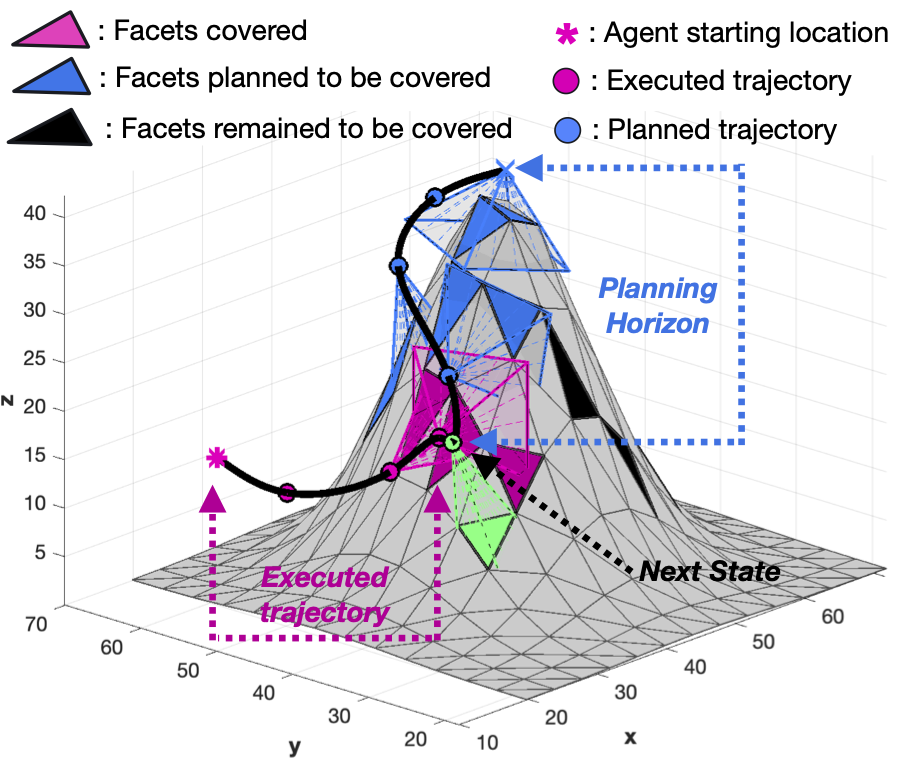

Jointly-optimized Trajectory Generation and Camera Control for 3D Coverage Planning. Savvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G. Panayiotou, and Marios M. Polycarpou. IEEE Transactions on Mobile Computing, 2025.

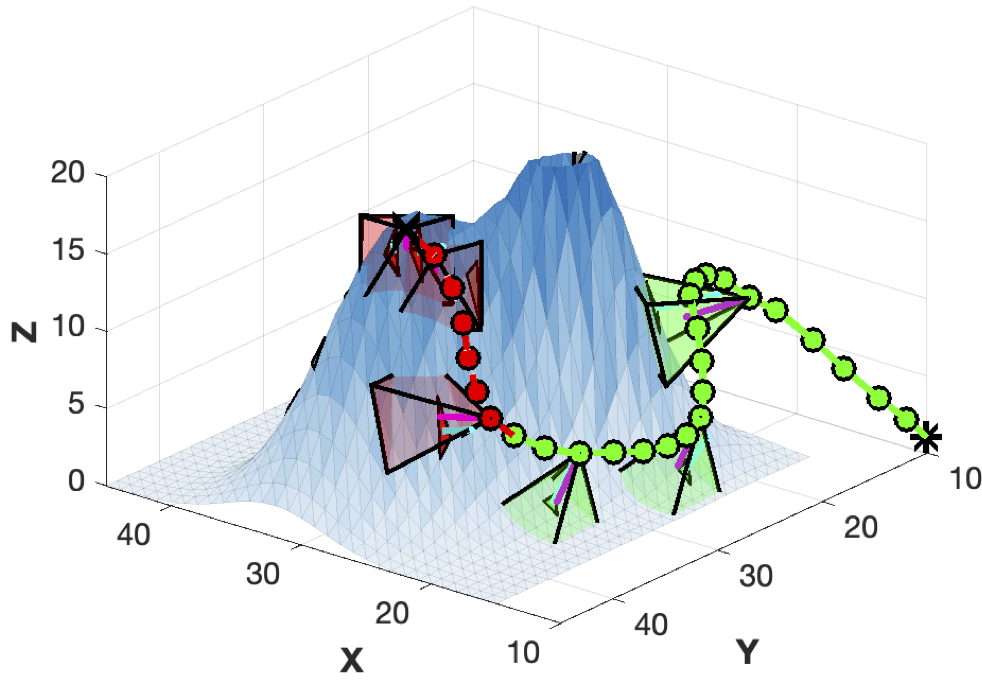

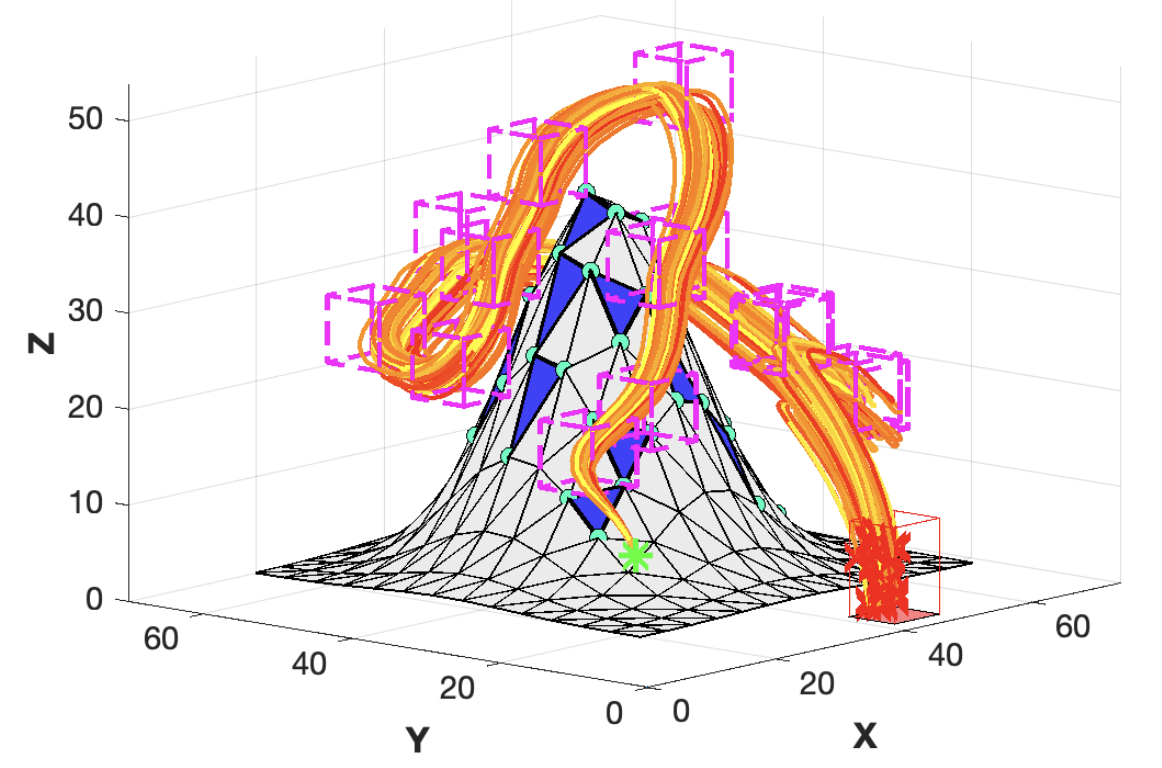

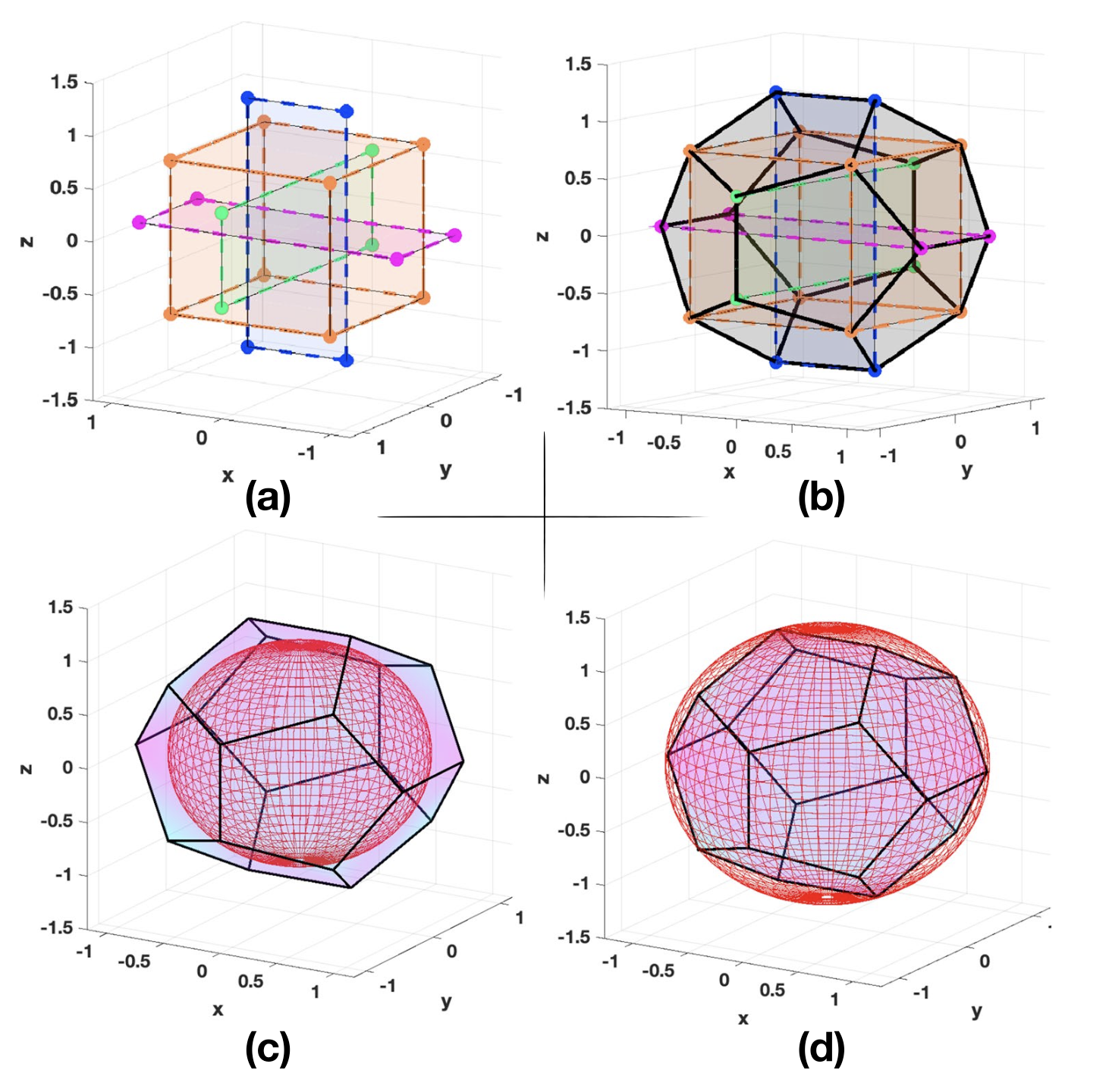

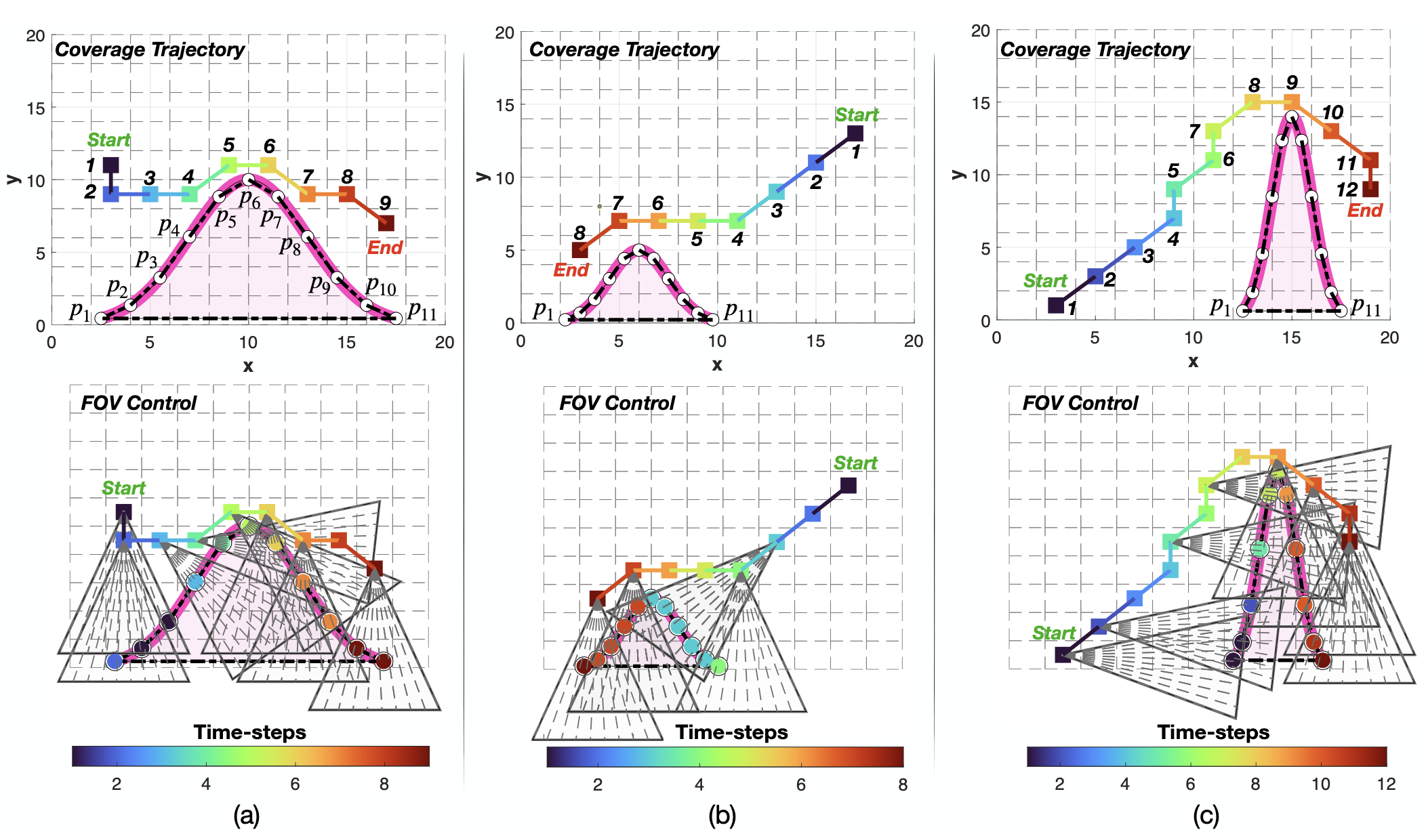

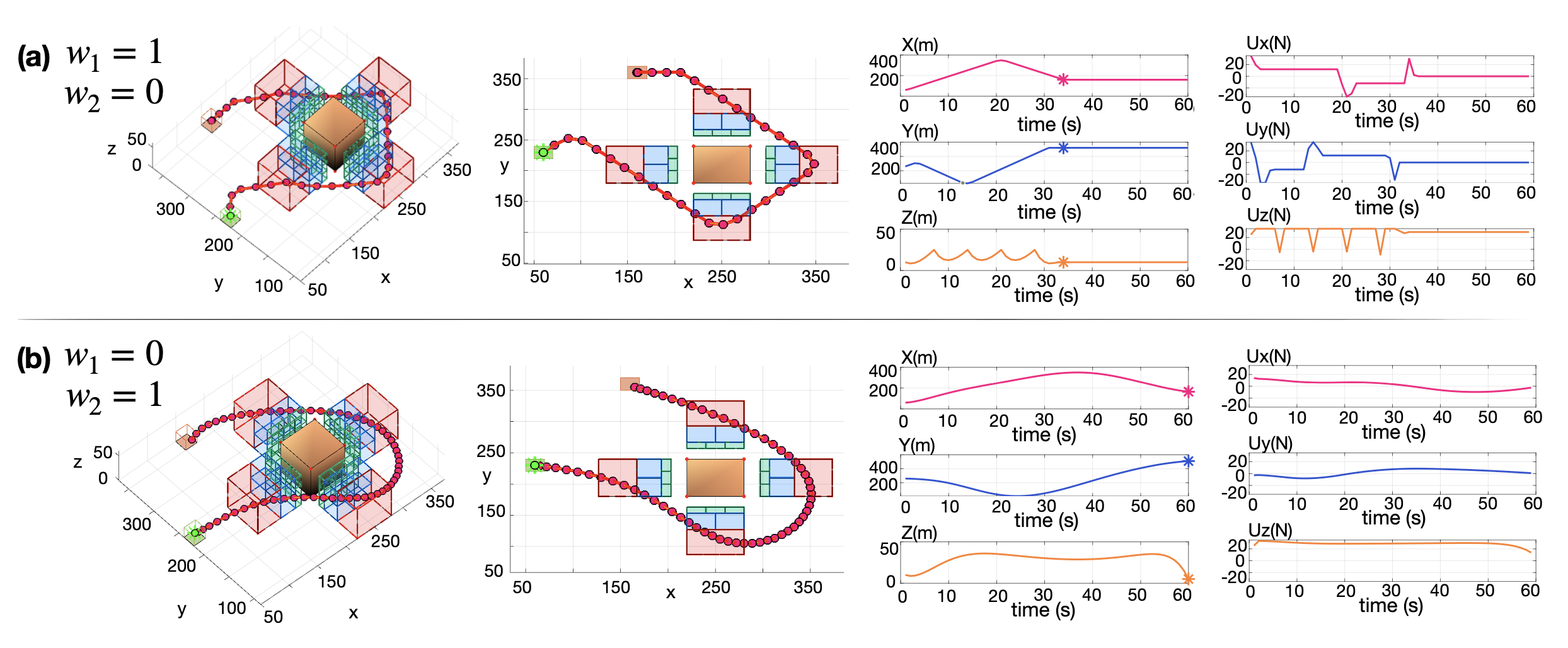

This work proposes a jointly-optimized trajectory generation and camera control approach which allows an autonomous UAV agent, operating in 3D environments, to plan and execute coverage trajectories that maximally cover the surface area of a 3D object of interest. More specifically, the UAV’s kinematic and camera control inputs are jointly-optimized over a rolling finite planning horizon for the complete 3D coverage of the object of interest. The proposed controller integrates ray-tracing into the planning process in order to simulate the propagation of light-rays and thus determine the visible parts of the object through the UAV’s camera. Subsequently, this enables the generation of accurate look-ahead coverage trajectories. The coverage planning problem is formulated in this work as a rolling finite horizon optimal control problem, and solved with mixed integer programming techniques. Extensive real-world and synthetic experiments demonstrate the performance of the proposed approach.

@article{9894722, author = {Papaioannou, Savvas and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G. and Polycarpou, Marios M.}, journal = {IEEE Transactions on Mobile Computing}, title = {Jointly-optimized Trajectory Generation and Camera Control for 3D Coverage Planning}, year = {2025}, volume = {24}, number = {8}, pages = {7519-7537}, doi = {10.1109/TMC.2025.3551362}, }
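The ray-tracing step in the abstract above decides which parts of the object a camera can see. A minimal ray-casting visibility check is sketched below, assuming the object is a triangle mesh and using the Möller–Trumbore ray/triangle intersection test; both are common textbook choices assumed here, not necessarily what the paper implements, and the names `ray_hits_triangle` and `is_visible` are hypothetical.

```python
import math


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_hits_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Möller–Trumbore test: distance t > 0 along `direction` to the hit point,
    or None if the ray misses the triangle (v0, v1, v2)."""
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:                  # ray parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = _sub(origin, v0)
    u = _dot(s, p) * inv_det            # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = _cross(s, e1)
    v = _dot(direction, q) * inv_det    # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = _dot(e2, q) * inv_det
    return t if t > eps else None       # ignore hits behind the origin


def is_visible(camera, target, triangles, eps=1e-6):
    """True if no triangle blocks the straight segment camera -> target."""
    d = _sub(target, camera)
    dist = math.sqrt(_dot(d, d))
    d = (d[0] / dist, d[1] / dist, d[2] / dist)
    for tri in triangles:
        t = ray_hits_triangle(camera, d, *tri)
        if t is not None and t < dist - eps:  # hit strictly before the target
            return False
    return True


# A single occluding triangle lying in the plane z = 0.
wall = [((-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (0.0, 1.0, 0.0))]
print(ray_hits_triangle((0.0, 0.0, -1.0), (0.0, 0.0, 1.0), *wall[0]))  # 1.0
print(is_visible((0.0, 0.0, -1.0), (0.0, 0.0, 1.0), wall))            # False
print(is_visible((0.0, 0.0, -1.0), (5.0, 0.0, -1.0), wall))           # True
```

Testing every triangle makes each query linear in the number of faces; ray tracers typically add a bounding-volume hierarchy when meshes get large.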

Adaptive Monitoring of Stochastic Fire Front Processes via Information-seeking Predictive Control. Savvas Papaioannou, Panayiotis Kolios, Christos G. Panayiotou, and Marios M. Polycarpou. In 2025 IEEE 64th Conference on Decision and Control (CDC), 2025.

We consider the problem of adaptively monitoring a wildfire front using a mobile agent (e.g., a drone), whose trajectory determines where sensor data is collected and thus influences the accuracy of fire propagation estimation. This is a challenging problem, as the stochastic nature of wildfire evolution requires the seamless integration of sensing, estimation, and control, often treated separately in existing methods. State-of-the-art methods either impose linear-Gaussian assumptions to establish optimality or rely on approximations and heuristics, often without providing explicit performance guarantees. To address these limitations, we formulate the fire front monitoring task as a stochastic optimal control problem that integrates sensing, estimation, and control. We derive an optimal recursive Bayesian estimator for a class of stochastic nonlinear elliptical-growth fire front models. Subsequently, we transform the resulting nonlinear stochastic control problem into a finite-horizon Markov decision process and design an information-seeking predictive control law obtained via a lower confidence bound-based adaptive search algorithm with asymptotic convergence to the optimal policy.

@inproceedings{PapaioannouCDC2025, author = {Papaioannou, Savvas and Kolios, Panayiotis and Panayiotou, Christos G. and Polycarpou, Marios M.}, booktitle = {2025 IEEE 64th Conference on Decision and Control (CDC)}, title = {Adaptive Monitoring of Stochastic Fire Front Processes via Information-seeking Predictive Control}, year = {2025}, volume = {}, number = {}, pages = {1875-1880}, doi = {10.1109/CDC57313.2025.11313014} }

VLM-RRT: Vision Language Model Guided RRT Search for Autonomous UAV Navigation. Jianlin Ye, Savvas Papaioannou, and Panayiotis Kolios. In 2025 International Conference on Unmanned Aircraft Systems (ICUAS), 2025.

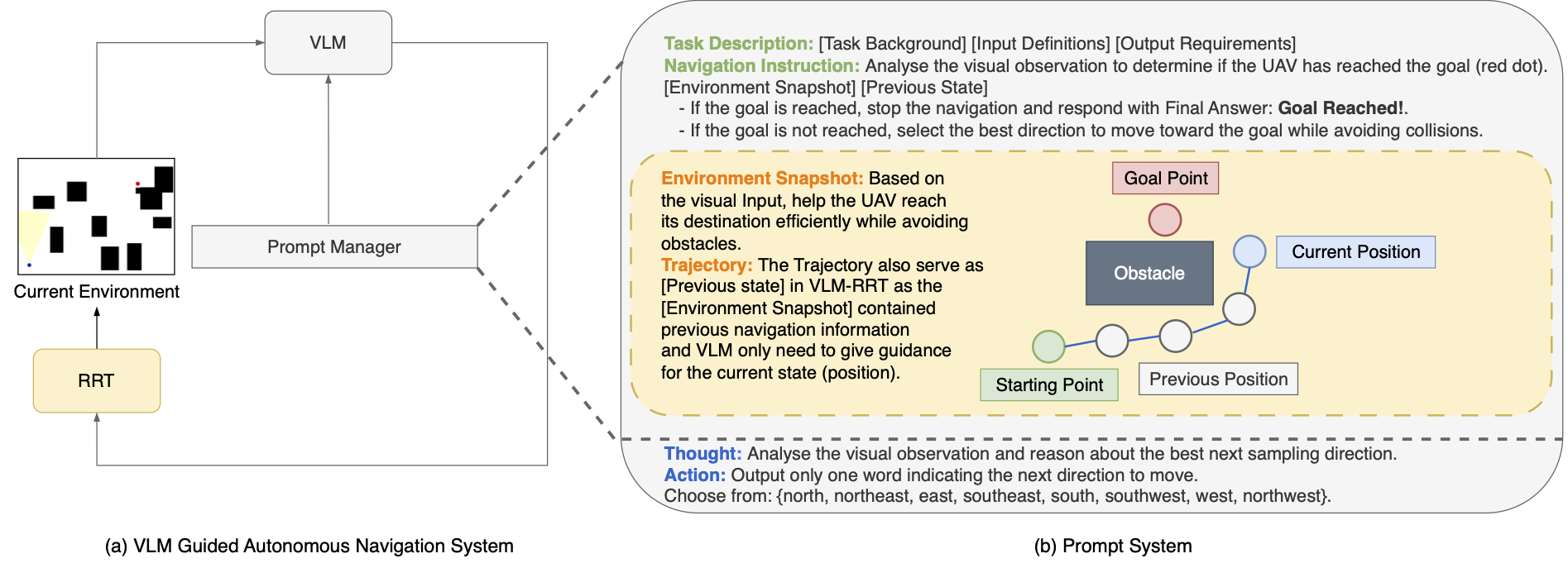

Path planning is a fundamental capability of autonomous Unmanned Aerial Vehicles (UAVs), enabling them to efficiently navigate toward a target region or explore complex environments while avoiding obstacles. Traditional path-planning methods, such as Rapidly-exploring Random Trees (RRT), have proven effective but often encounter significant challenges. These include high search space complexity, suboptimal path quality, and slow convergence, issues that are particularly problematic in high-stakes applications like disaster response, where rapid and efficient planning is critical. To address these limitations and enhance path-planning efficiency, we propose Vision Language Model RRT (VLM-RRT), a hybrid approach that integrates the pattern recognition capabilities of Vision Language Models (VLMs) with the path-planning strengths of RRT. By leveraging VLMs to provide initial directional guidance based on environmental snapshots, our method biases sampling toward regions more likely to contain feasible paths, significantly improving sampling efficiency and path quality. Extensive quantitative and qualitative experiments with various state-of-the-art VLMs demonstrate the effectiveness of this proposed approach.

@inproceedings{JianlinICUAS25, author = {Ye, Jianlin and Papaioannou, Savvas and Kolios, Panayiotis}, booktitle = {2025 International Conference on Unmanned Aircraft Systems (ICUAS)}, title = {VLM-RRT: Vision Language Model Guided RRT Search for Autonomous UAV Navigation}, year = {2025}, volume = {}, number = {}, pages = {633-640}, doi = {10.1109/ICUAS65942.2025.11007837}, }
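The idea of biasing RRT sampling toward a suggested direction can be sketched in a few dozen lines. This is a minimal 2D illustration under stated assumptions: the VLM is replaced by a fixed, hypothetical `guide_dir` vector, obstacles are circles, and the cone-shaped bias (`bias`, `half_angle`) is one simple way to skew the sampler, not the mechanism used in the paper.

```python
import math
import random


def biased_sample(rng, bounds, origin, guide_dir, bias=0.5,
                  half_angle=math.radians(30.0)):
    """With probability `bias`, sample inside a cone around `guide_dir` that
    starts at `origin` (a hypothetical stand-in for the VLM's hint);
    otherwise sample uniformly over the workspace."""
    (xmin, xmax), (ymin, ymax) = bounds
    if rng.random() < bias:
        heading = math.atan2(guide_dir[1], guide_dir[0])
        heading += rng.uniform(-half_angle, half_angle)
        r = rng.uniform(0.0, math.hypot(xmax - xmin, ymax - ymin))
        x = min(max(origin[0] + r * math.cos(heading), xmin), xmax)
        y = min(max(origin[1] + r * math.sin(heading), ymin), ymax)
        return (x, y)
    return (rng.uniform(xmin, xmax), rng.uniform(ymin, ymax))


def collision_free(p, q, obstacles, n=20):
    """Check n + 1 evenly spaced points on segment p -> q against circles."""
    for i in range(n + 1):
        s = i / n
        x, y = p[0] + s * (q[0] - p[0]), p[1] + s * (q[1] - p[1])
        if any(math.hypot(x - cx, y - cy) <= r for cx, cy, r in obstacles):
            return False
    return True


def rrt(start, goal, obstacles, bounds, guide_dir, bias=0.5, step=0.5,
        goal_tol=0.5, max_iter=5000, seed=0):
    """Basic RRT with a direction-biased sampler; returns waypoints from start
    to (near) goal, or None if max_iter is exhausted."""
    rng = random.Random(seed)
    nodes, parent = [start], [None]
    for _ in range(max_iter):
        sample = biased_sample(rng, bounds, start, guide_dir, bias)
        # Nearest node by linear scan (a k-d tree would scale better).
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        if d > step:  # steer at most `step` towards the sample
            sample = (near[0] + step * (sample[0] - near[0]) / d,
                      near[1] + step * (sample[1] - near[1]) / d)
        if not collision_free(near, sample, obstacles):
            continue
        nodes.append(sample)
        parent.append(i_near)
        if math.dist(sample, goal) <= goal_tol:
            path, i = [], len(nodes) - 1
            while i is not None:  # walk back to the root
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None


obstacles = [(5.0, 5.0, 1.5)]  # (center_x, center_y, radius)
path = rrt((1.0, 1.0), (9.0, 9.0), obstacles, ((0.0, 10.0), (0.0, 10.0)),
           guide_dir=(1.0, 0.5))
print(len(path) if path else "no path found")
```

Passing `bias=0.0` recovers plain uniform-sampling RRT, which gives a baseline for comparing how many iterations each variant needs; in VLM-RRT the direction would instead come from a model's reading of an environment snapshot.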

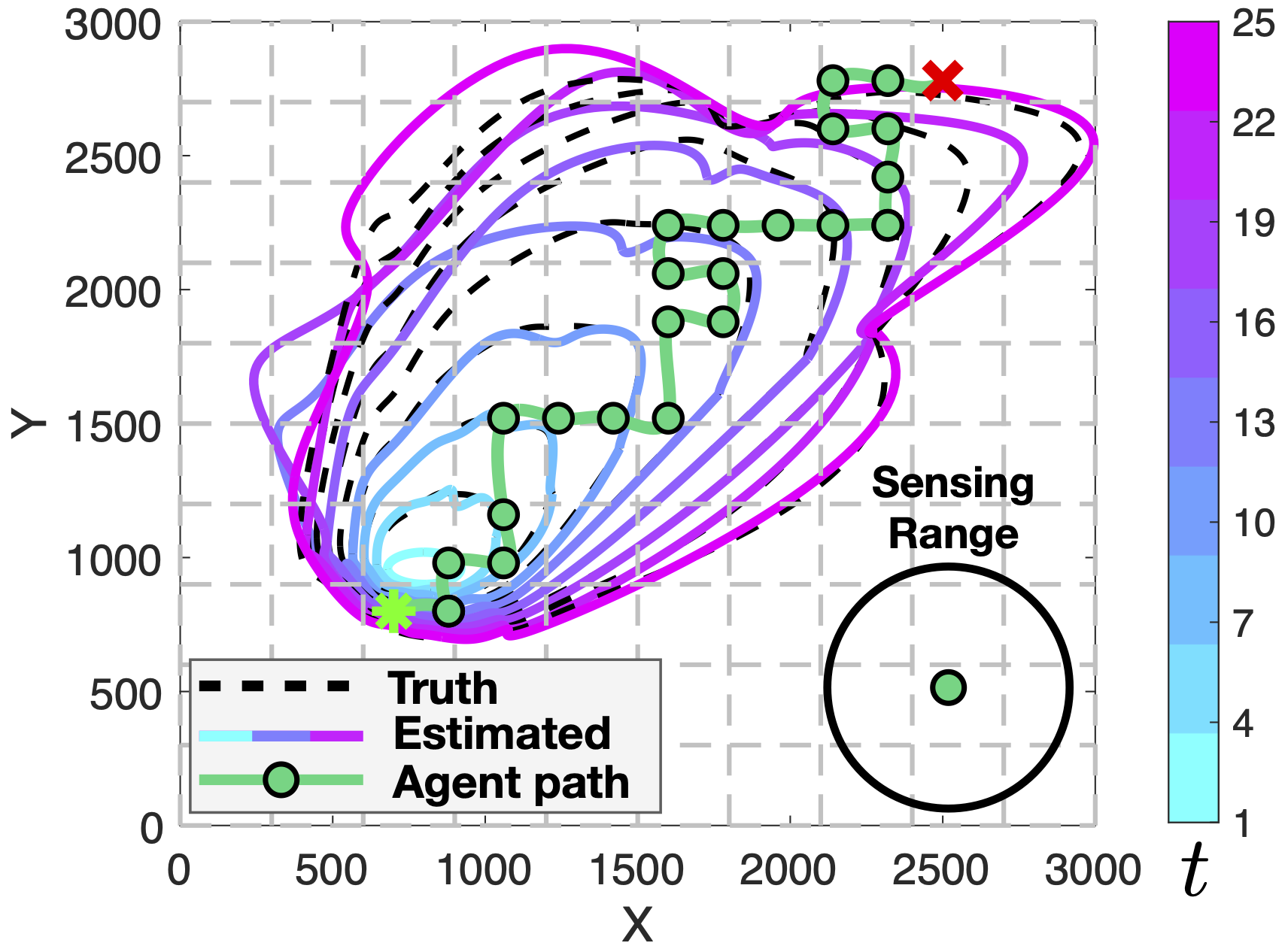

Multiple Target Tracking Using a UAV Swarm in Maritime Environments. Andreas Anastasiou, Savvas Papaioannou, Panayiotis Kolios, and Christos G. Panayiotou. In 2025 European Control Conference (ECC), 2025.

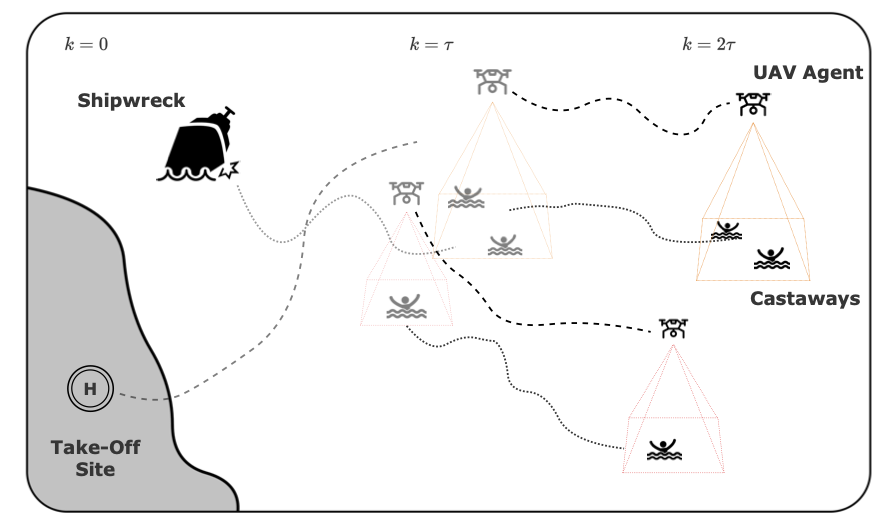

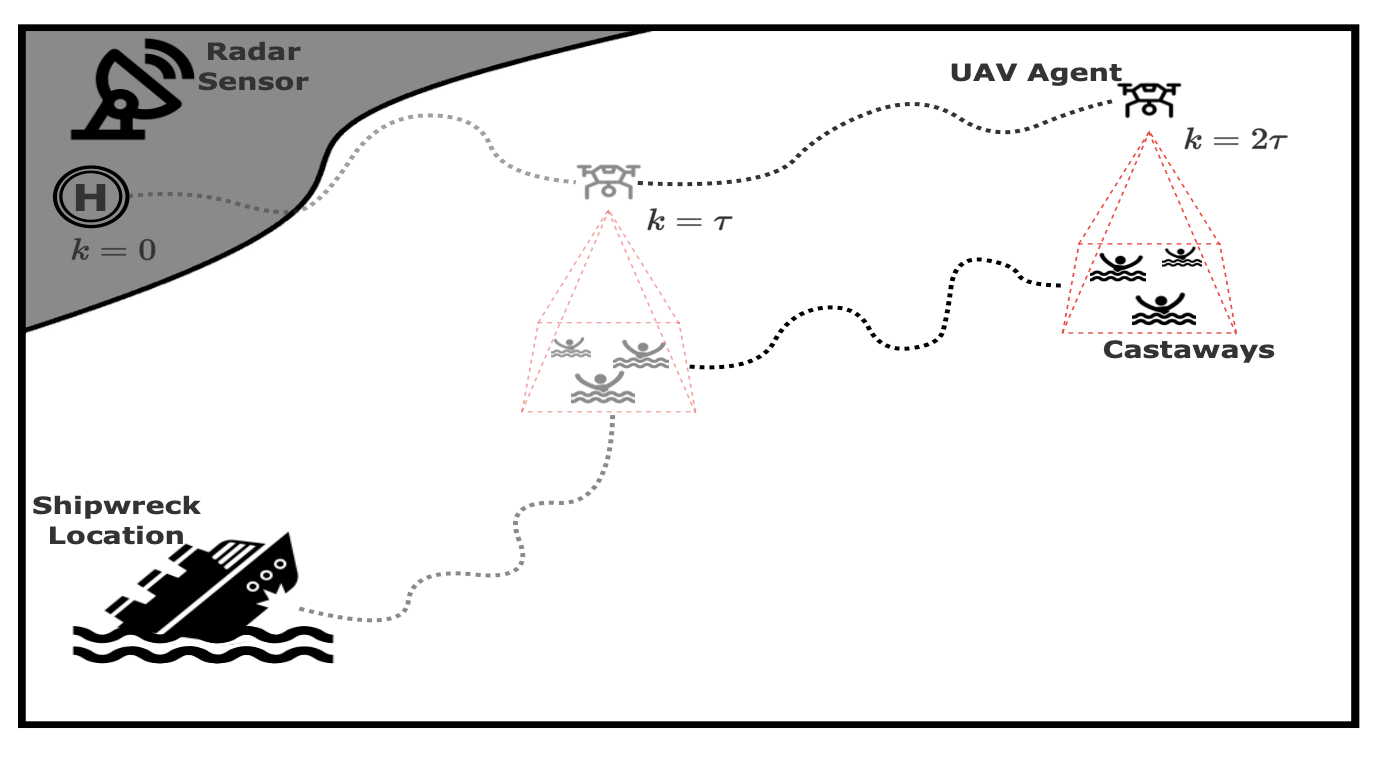

Nowadays, unmanned aerial vehicles (UAVs) are increasingly utilized in search and rescue missions, a trend driven by technological advancements, including enhancements in automation, avionics, and the reduced cost of electronics. In this work, we introduce a collaborative model predictive control (MPC) framework aimed at addressing the joint problem of guidance and state estimation for tracking multiple castaway targets with a fleet of autonomous UAV agents. We assume that each UAV agent is equipped with a camera sensor, which has a limited sensing range and is utilized for receiving noisy observations from multiple moving castaways adrift in maritime conditions. We derive a nonlinear mixed integer programming (NMIP)-based controller that facilitates the guidance of the UAVs by generating non-myopic trajectories within a receding planning horizon. These trajectories are designed to minimize the tracking error across multiple targets by directing the UAV fleet to locations expected to yield target measurements, thereby minimizing the uncertainty of the estimated target states. Extensive simulation experiments validate the effectiveness of our proposed method in tracking multiple castaways in maritime environments.

@inproceedings{AnastasiouECC25, author = {Anastasiou, Andreas and Papaioannou, Savvas and Kolios, Panayiotis and Panayiotou, Christos G.}, booktitle = {2025 European Control Conference (ECC)}, title = {Multiple Target Tracking Using a UAV Swarm in Maritime Environments}, year = {2025}, volume = {}, number = {}, pages = {2167-2172}, doi = {10.23919/ECC65951.2025.11187064}, }

Adaptive Out-of-Control Point Pattern Detection in Sequential Random Finite Set Observations. Konstantinos Bourazas, Savvas Papaioannou, and Panayiotis Kolios. In 2025 European Control Conference (ECC), 2025.

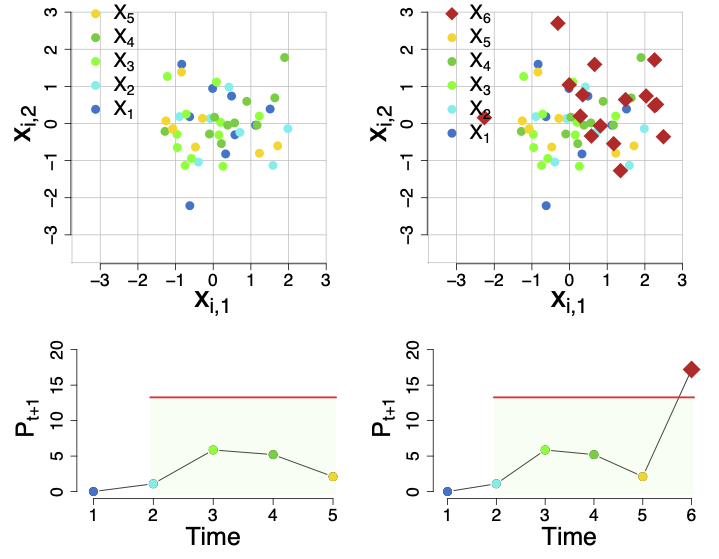

In this work we introduce a novel adaptive anomaly detection framework specifically designed for monitoring sequential random finite set (RFS) observations. Our approach effectively distinguishes between in-control data (normal) and out-of-control data (anomalies) by detecting deviations from the expected statistical behavior of the process. The primary contributions of this study include the development of an innovative RFS-based framework that not only learns the normal behavior of the data-generating process online but also dynamically adapts to behavioral shifts to accurately identify abnormal point patterns. To achieve this, we introduce a new class of RFS-based posterior distributions, named Power Discounting Posteriors (PD), which facilitate adaptation to systematic changes in data while enabling anomaly detection of point pattern data through a novel predictive posterior density function. The effectiveness of the proposed approach is demonstrated by extensive qualitative and quantitative simulation experiments.

@inproceedings{BourazasECC25, author = {Bourazas, Konstantinos and Papaioannou, Savvas and Kolios, Panayiotis}, booktitle = {2025 European Control Conference (ECC)}, title = {Adaptive Out-of-Control Point Pattern Detection in Sequential Random Finite Set Observations}, year = {2025}, volume = {}, number = {}, pages = {1549-1556}, doi = {10.23919/ECC65951.2025.11187123}, }
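The paper defines Power Discounting posteriors over random finite sets. The underlying discounting idea can be illustrated on a much simpler, hypothetical model that only watches how many points each observation contains: Poisson cardinalities with a conjugate Gamma prior. Before each update the posterior is raised to a power `alpha` in (0, 1), so older data gradually fades and the model can follow slow shifts; a count is flagged when its posterior predictive tail probability is small. The cardinality-only model, `alpha`, `threshold` and the tail-probability score are all assumptions for this sketch, not the paper's RFS posterior or its predictive density.

```python
import math


def discount_and_update(a, b, n, alpha=0.9):
    """Temper a Gamma(shape a, rate b) posterior by the power alpha, then apply
    the conjugate update for a Poisson count n."""
    # Gamma(a, b) density ** alpha is proportional to Gamma(alpha*(a-1)+1, alpha*b)
    a_disc, b_disc = alpha * (a - 1.0) + 1.0, alpha * b
    return a_disc + n, b_disc + 1.0


def predictive_pmf(n, a, b):
    """Negative-binomial posterior predictive P(N = n) under Gamma(a, b)."""
    log_p = (math.lgamma(n + a) - math.lgamma(a) - math.lgamma(n + 1)
             + a * math.log(b / (b + 1.0)) - n * math.log(b + 1.0))
    return math.exp(log_p)


def upper_tail(n, a, b):
    """Predictive P(N >= n)."""
    return max(0.0, 1.0 - sum(predictive_pmf(k, a, b) for k in range(n)))


def monitor(counts, a=1.0, b=1.0, alpha=0.9, threshold=0.01):
    """Flag each count whose predictive upper-tail probability is below
    `threshold`, scoring it BEFORE it is absorbed into the posterior."""
    flags = []
    for n in counts:
        flags.append(upper_tail(n, a, b) < threshold)
        a, b = discount_and_update(a, b, n, alpha)
    return flags


counts = [5] * 30 + [25] + [5] * 5  # synthetic cardinalities with one spike
print([i for i, f in enumerate(monitor(counts)) if f])  # [30]
```

With `alpha = 1` the update reduces to ordinary conjugate Bayesian learning, whose effective memory grows without bound; a smaller `alpha` caps the effective sample size (the rate parameter settles near `1 / (1 - alpha)`), which is what lets the detector adapt.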

Data-Driven Predictive Planning and Control for Aerial 3D Inspection with Back-face Elimination. Savvas Papaioannou, Panayiotis Kolios, Christos G. Panayiotou, and Marios M. Polycarpou. In 2025 European Control Conference (ECC), 2025.

Automated inspection with Unmanned Aerial Systems (UASs) is a transformative capability set to revolutionize various application domains. However, this task is inherently complex, as it demands the seamless integration of perception, planning, and control which existing approaches often treat separately. Moreover, it requires accurate long-horizon planning to predict action sequences, in contrast to many current techniques, which tend to be myopic. To overcome these limitations, we propose a 3D inspection approach that unifies perception, planning, and control within a single data-driven predictive control framework. Unlike traditional methods that rely on known UAS dynamic models, our approach requires only input-output data, making it easily applicable to off-the-shelf black-box UASs. Our method incorporates back-face elimination, a visibility determination technique from 3D computer graphics, directly into the control loop, thereby enabling the online generation of accurate, long-horizon 3D inspection trajectories.

@inproceedings{PapaioannouECC25, author = {Papaioannou, Savvas and Kolios, Panayiotis and Panayiotou, Christos G and Polycarpou, Marios M}, booktitle = {2025 European Control Conference (ECC)}, title = {Data-Driven Predictive Planning and Control for Aerial 3D Inspection with Back-face Elimination}, year = {2025}, volume = {}, number = {}, pages = {2160-2166}, doi = {10.23919/ECC65951.2025.11186919}, }
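Back-face elimination, which the abstract above builds into the control loop, reduces to a sign test on a dot product: a planar face with outward normal n through point p can only be seen from a viewpoint c if n · (c - p) > 0. The snippet below shows only that geometric test on a hypothetical unit-cube model; how the paper embeds it into its data-driven predictive controller is not reproduced here.

```python
def is_front_facing(face_point, face_normal, viewpoint):
    """Back-face test: True if the face's outward normal points towards the
    viewpoint, i.e. n . (c - p) > 0."""
    to_viewer = [c - p for c, p in zip(viewpoint, face_point)]
    return sum(n * v for n, v in zip(face_normal, to_viewer)) > 0.0


# Faces of the unit cube [0, 1]^3 as (center, outward normal) pairs.
faces = [
    ((1.0, 0.5, 0.5), (1, 0, 0)), ((0.0, 0.5, 0.5), (-1, 0, 0)),
    ((0.5, 1.0, 0.5), (0, 1, 0)), ((0.5, 0.0, 0.5), (0, -1, 0)),
    ((0.5, 0.5, 1.0), (0, 0, 1)), ((0.5, 0.5, 0.0), (0, 0, -1)),
]

for viewpoint in [(3.0, 0.5, 0.5), (3.0, 3.0, 3.0)]:
    visible = [n for c, n in faces if is_front_facing(c, n, viewpoint)]
    print(viewpoint, "->", visible)
```

From (3, 0.5, 0.5) only the +x face passes, and from (3, 3, 3) the three positive faces pass. Because n · (c - p) is affine in the viewer position c, the same test can be written as a linear inequality on a predicted UAV position inside an optimization problem. On non-convex objects it is only a necessary condition, since a front-facing face can still be occluded by other parts of the object.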

2024


Probabilistically Robust Trajectory Planning of Multiple Aerial Agents. Christian Vitale, Savvas Papaioannou, Panayiotis Kolios, and Georgios Ellinas. In 2024 18th International Conference on Control, Automation, Robotics and Vision (ICARCV), 2024.

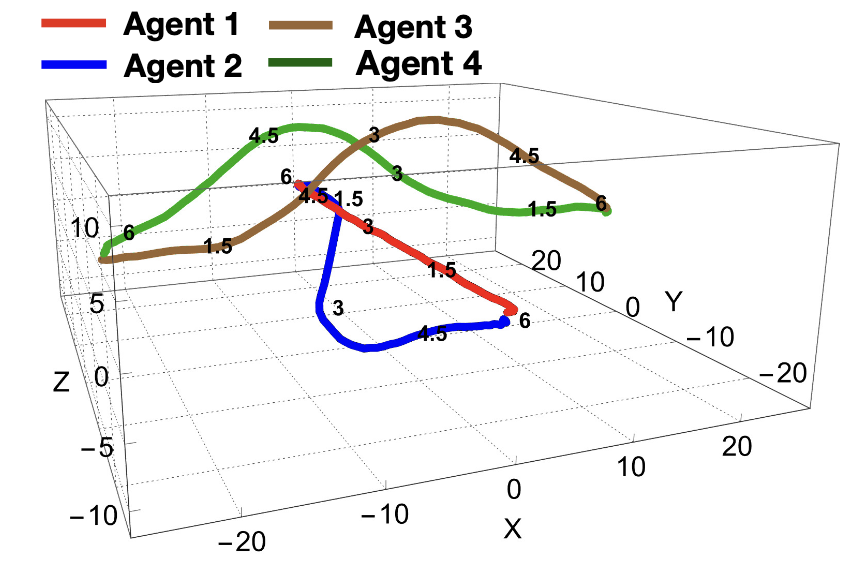

Current research on robust trajectory planning for autonomous agents aims to mitigate uncertainties arising from disturbances and modeling errors while ensuring guaranteed safety. Existing methods primarily utilize stochastic optimal control techniques with chance constraints to maintain a minimum distance among agents with a guaranteed probability. However, these approaches face challenges, such as the use of simplifying assumptions that result in linear system models or Gaussian disturbances, which limit their practicality in complex realistic scenarios. To address these limitations, this work introduces a novel probabilistically robust distributed controller enabling autonomous agents to plan safe trajectories, even under non-Gaussian uncertainty and nonlinear systems. Leveraging exact uncertainty propagation techniques based on mixed-trigonometric-polynomial moment propagation, this method transforms non-Gaussian chance constraints into deterministic ones, seamlessly integrating them into a distributed model predictive control framework solvable with standard optimization tools. Simulation results demonstrate the effectiveness of this technique, highlighting its ability to consistently handle various types of uncertainty, ensuring robust and accurate path planning in complex scenarios.

@inproceedings{icarcv2024, author = {Vitale, Christian and Papaioannou, Savvas and Kolios, Panayiotis and Ellinas, Georgios}, booktitle = {2024 18th International Conference on Control, Automation, Robotics and Vision (ICARCV)}, title = {Probabilistically Robust Trajectory Planning of Multiple Aerial Agents}, year = {2024}, volume = {}, number = {}, pages = {852-859}, doi = {10.1109/ICARCV63323.2024.10821544}, }
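The abstract above converts non-Gaussian chance constraints into deterministic ones through exact moment propagation. A minimal sketch of that pipeline follows, under simplifying assumptions that are not taken from the paper: only the x-coordinate of a unicycle-like agent is tracked, `x_{k+1} = x_k + v*dt*cos(θ_k)`, and each commanded heading is perturbed by independent uniform noise. For this model E[cos θ] and E[cos² θ] have closed forms, so the mean and variance of the (non-Gaussian) position are exact. The chance constraint P(x ≥ bound) ≤ ε is then replaced by Cantelli's one-sided inequality, a distribution-free tightening that the paper may not use. All function names and numbers are hypothetical.

```python
import math


def cos_moments_uniform(mu, a):
    """Exact E[cos θ] and E[cos² θ] for θ ~ Uniform(mu - a, mu + a), a > 0."""
    m1 = math.cos(mu) * math.sin(a) / a
    # cos² θ = (1 + cos 2θ) / 2, and 2θ is uniform on (2mu - 2a, 2mu + 2a)
    m2 = 0.5 + 0.5 * math.cos(2.0 * mu) * math.sin(2.0 * a) / (2.0 * a)
    return m1, m2


def propagate_x(x_mean, x_var, v, dt, headings, a):
    """Exact mean/variance of x after x_{k+1} = x_k + v*dt*cos(θ_k), with
    independent θ_k ~ Uniform(h_k - a, h_k + a) for each commanded heading h_k."""
    for h in headings:
        m1, m2 = cos_moments_uniform(h, a)
        x_mean += v * dt * m1
        x_var += (v * dt) ** 2 * (m2 - m1 ** 2)  # independent terms: variances add
    return x_mean, x_var


def cantelli_ok(mean, var, bound, eps):
    """Deterministic surrogate guaranteeing P(x >= bound) <= eps for any
    distribution with this mean and variance (Cantelli's inequality).
    Strict '<' also covers the zero-variance case."""
    return mean + math.sqrt((1.0 - eps) / eps) * math.sqrt(var) < bound


mean, var = propagate_x(0.0, 0.0, v=2.0, dt=0.5,
                        headings=[0.0, 0.2, 0.4, 0.6], a=0.5)
print(mean, var, cantelli_ok(mean, var, bound=4.0, eps=0.05))
```

Inside a predictive controller the commanded headings would be decision variables, and `cantelli_ok` would become an ordinary nonlinear inequality constraint on them, which is the sense in which the chance constraint becomes deterministic.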

Automated Real-Time Inspection in Indoor and Outdoor 3D Environments with Cooperative Aerial Robots. Andreas Anastasiou, Angelos Zacharia, Savvas Papaioannou, Panayiotis Kolios, Christos G. Panayiotou, and Marios M. Polycarpou. In 2024 International Conference on Unmanned Aircraft Systems (ICUAS), 2024.

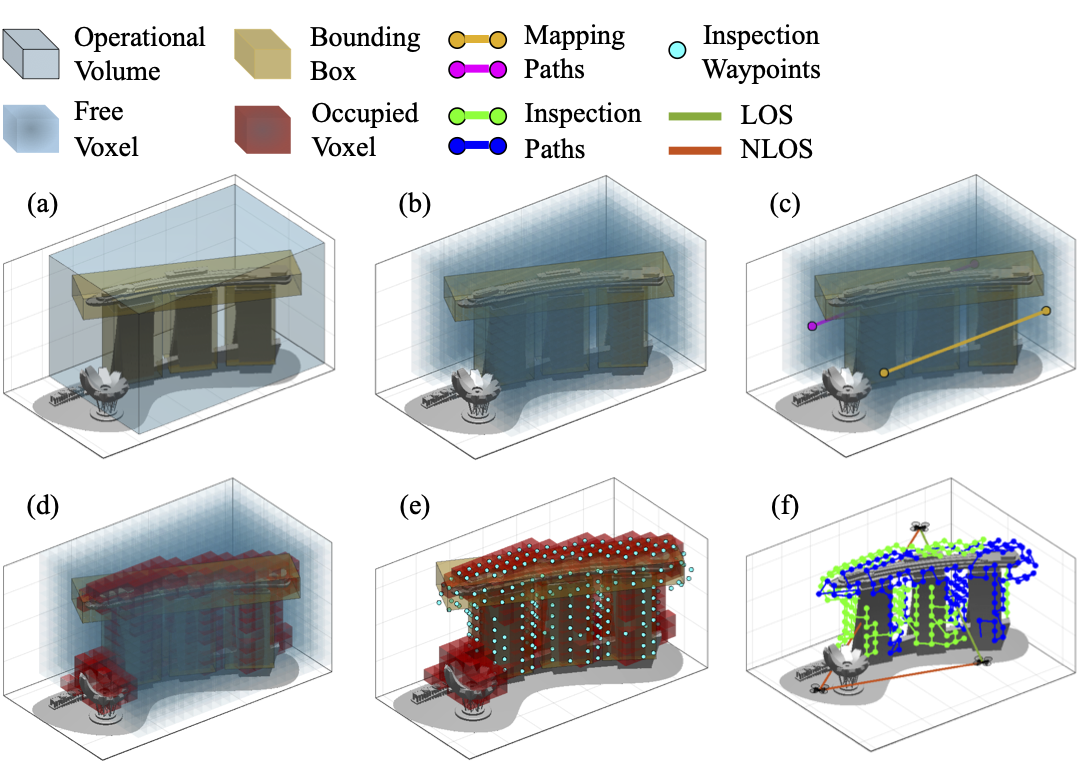

This work introduces a cooperative inspection system designed to efficiently control and coordinate a team of distributed heterogeneous UAV agents for the inspection of 3D structures in cluttered, unknown spaces. Our proposed approach employs an innovative two-stage methodology. First, it leverages the complementary sensing capabilities of the robots to cooperatively map the unknown environment. It then generates optimized, collision-free inspection paths, thereby ensuring comprehensive coverage of the structure’s surface area. The effectiveness of our system is demonstrated through qualitative and quantitative results from extensive Gazebo-based simulations that closely replicate real-world inspection scenarios, highlighting its ability to thoroughly inspect realistic 3D structures.

@inproceedings{icuas2024, author = {Anastasiou, Andreas and Zacharia, Angelos and Papaioannou, Savvas and Kolios, Panayiotis and Panayiotou, Christos G. and Polycarpou, Marios M.}, booktitle = {2024 International Conference on Unmanned Aircraft Systems (ICUAS)}, title = {Automated Real-Time Inspection in Indoor and Outdoor 3D Environments with Cooperative Aerial Robots}, year = {2024}, doi = {10.1109/ICUAS60882.2024.10557006}, }

Synergising Human-like Responses and Machine Intelligence for Planning in Disaster Response. Savvas Papaioannou, Panayiotis Kolios, Christos G. Panayiotou, and Marios M. Polycarpou. In 2024 International Joint Conference on Neural Networks (IJCNN), 2024.

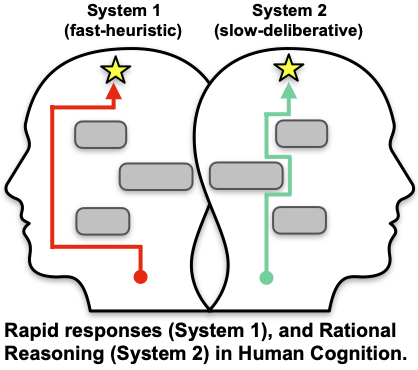

In the rapidly changing environments of disaster response, planning and decision-making for autonomous agents involve complex and interdependent choices. Although recent advancements have improved traditional artificial intelligence (AI) approaches, they often struggle in such settings, particularly when applied to agents operating outside their well-defined training parameters. To address these challenges, we propose an attention-based cognitive architecture inspired by Dual Process Theory (DPT). This framework integrates, in an online fashion, rapid yet heuristic (human-like) responses (System 1) with the slow but optimized planning capabilities of machine intelligence (System 2). We illustrate how a supervisory controller can dynamically determine in real-time the engagement of either system to optimize mission objectives by assessing their performance across a number of distinct attributes. Evaluated for trajectory planning in dynamic environments, our framework demonstrates that this synergistic integration effectively manages complex tasks by optimizing multiple mission objectives.

@inproceedings{wcci2024, author = {Papaioannou, Savvas and Kolios, Panayiotis and Panayiotou, Christos G. and Polycarpou, Marios M.}, booktitle = {2024 International Joint Conference on Neural Networks (IJCNN)}, title = {Synergising Human-like Responses and Machine Intelligence for Planning in Disaster Response}, year = {2024}, volume = {}, number = {}, pages = {1-8}, doi = {10.1109/IJCNN60899.2024.10651466}, }

Hierarchical Fault-Tolerant Coverage Control for an Autonomous Aerial Agent. Savvas Papaioannou, Christian Vitale, Panayiotis Kolios, Christos G. Panayiotou, and Marios M. Polycarpou. In 12th IFAC Symposium on Fault Detection, Supervision and Safety for Technical Processes (SAFEPROCESS 2024), 2024.

Fault-tolerant coverage control involves determining a trajectory that enables an autonomous agent to cover specific points of interest, even in the presence of actuation and/or sensing faults. In this work, the agent encounters erroneous control inputs; specifically, its nominal control inputs are perturbed by stochastic disturbances, potentially disrupting its intended operation. Existing techniques have focused on deterministically bounded disturbances or relied on the assumption of Gaussian disturbances, whereas non-Gaussian disturbances have primarily been tackled via scenario-based stochastic control methods. However, the assumption of Gaussian disturbances is generally limited to linear systems, and scenario-based methods can become computationally prohibitive. To address these limitations, we propose a hierarchical coverage controller that integrates mixed-trigonometric-polynomial moment propagation to propagate non-Gaussian disturbances through the agent’s nonlinear dynamics. Specifically, the first stage generates an ideal reference plan by optimising the agent’s mobility and camera control inputs. The second-stage fault-tolerant controller then aims to follow this reference plan, even in the presence of erroneous control inputs caused by non-Gaussian disturbances. This is achieved by imposing a set of deterministic constraints on the moments of the system’s uncertain states.

@inproceedings{safeprocess2024, title = {Hierarchical Fault-Tolerant Coverage Control for an Autonomous Aerial Agent}, author = {Papaioannou, Savvas and Vitale, Christian and Kolios, Panayiotis and Panayiotou, Christos G. and Polycarpou, Marios M.}, booktitle = {12th IFAC Symposium on Fault Detection, Supervision and Safety for Technical Processes (SAFEPROCESS 2024)}, volume = {58}, number = {4}, pages = {532-537}, year = {2024}, issn = {2405-8963}, doi = {10.1016/j.ifacol.2024.07.273}, }

2023


Distributed Search Planning in 3-D Environments With a Dynamically Varying Number of Agents. Savvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G. Panayiotou, and Marios M. Polycarpou. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 2023.

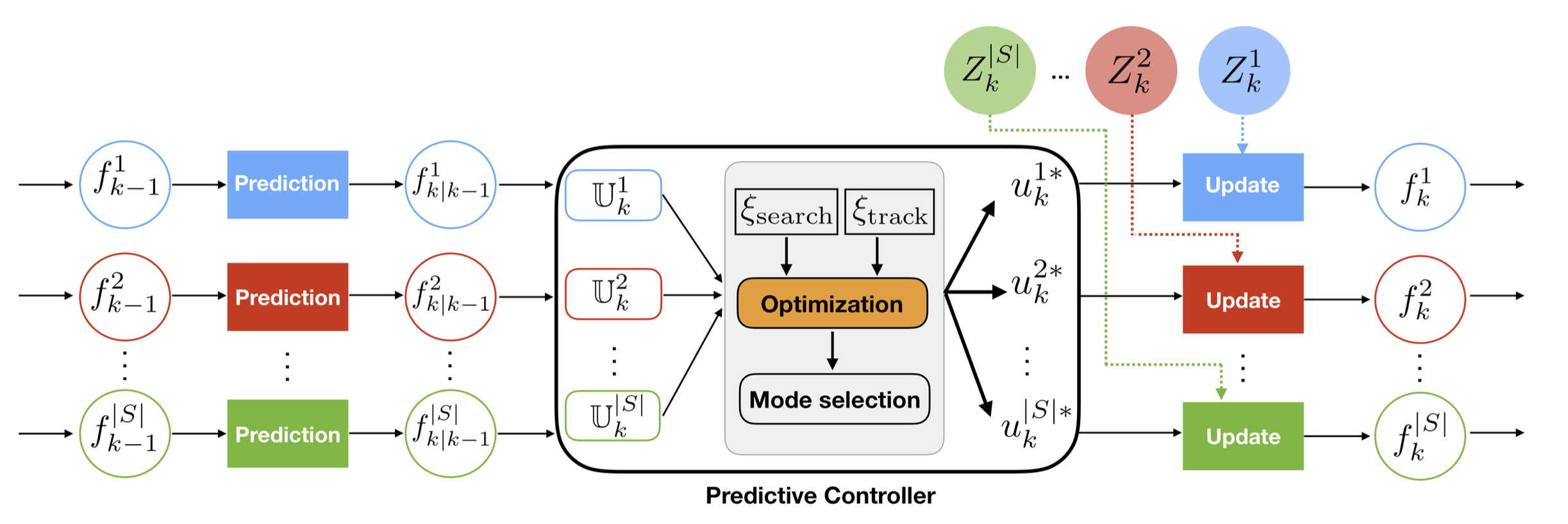

In this work, a novel distributed search-planning framework is proposed, where a dynamically varying team of autonomous agents cooperate to search for multiple objects of interest in three dimensions (3-D). It is assumed that the agents can enter and exit the mission space at any point in time; as a result, the number of agents actively participating in the mission varies over time. The proposed distributed search-planning framework takes into account the agents’ dynamical and sensing models and the dynamically varying number of agents, and utilizes model predictive control (MPC) to generate cooperative search trajectories over a finite rolling planning horizon. This enables the agents to adapt their decisions online while considering the plans of their peers, maximizing their search-planning performance and reducing the duplication of work.

@article{10044164, author = {Papaioannou, Savvas and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G. and Polycarpou, Marios M.}, journal = {IEEE Transactions on Systems, Man, and Cybernetics: Systems}, title = {Distributed Search Planning in 3-D Environments With a Dynamically Varying Number of Agents}, year = {2023}, volume = {53}, number = {7}, pages = {4117-4130}, doi = {10.1109/TSMC.2023.3240023}, }

Integrated Guidance and Gimbal Control for Coverage Planning With Visibility Constraints. Savvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G. Panayiotou, and Marios M. Polycarpou. IEEE Transactions on Aerospace and Electronic Systems, 2023.

Coverage path planning with unmanned aerial vehicles (UAVs) is a core task for many services and applications including search and rescue, precision agriculture, infrastructure inspection and surveillance. This work proposes an integrated guidance and gimbal control coverage path planning (CPP) approach, in which the mobility and gimbal inputs of an autonomous UAV agent are jointly controlled and optimized to achieve full coverage of a given object of interest, according to a specified set of optimality criteria. The proposed approach uses a set of visibility constraints to integrate the physical behavior of sensor signals (i.e., camera-rays) into the coverage planning process, thus generating optimized coverage trajectories that take into account which parts of the scene are visible through the agent’s camera at any point in time. The integrated guidance and gimbal control CPP problem is posed in this work as a constrained optimal control problem which is then solved using mixed integer programming (MIP) optimization. Extensive numerical experiments demonstrate the effectiveness of the proposed approach.

@article{9861757, author = {Papaioannou, Savvas and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G. and Polycarpou, Marios M.}, journal = {IEEE Transactions on Aerospace and Electronic Systems}, title = {Integrated Guidance and Gimbal Control for Coverage Planning With Visibility Constraints}, year = {2023}, volume = {59}, number = {2}, pages = {1276-1291}, doi = {10.1109/TAES.2022.3199196}, }

Cooperative Receding Horizon 3D Coverage Control with a Team of Networked Aerial Agents. Savvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G. Panayiotou, and Marios M. Polycarpou. In 2023 IEEE 62nd Conference on Decision and Control (CDC), 2023.

This work proposes a receding horizon coverage control approach which allows multiple autonomous aerial agents to work cooperatively in order to cover the total surface area of a 3D object of interest. The cooperative coverage problem, which is posed in this work as an optimal control problem, jointly optimizes the agents’ kinematic and camera control inputs, while considering coupling constraints amongst the team of agents which aim at minimizing the duplication of work. To generate look-ahead coverage trajectories over a finite planning horizon, the proposed approach integrates visibility constraints into the coverage controller in order to determine the visible part of the object with respect to the agents’ future states. In particular, we show how non-linear and non-convex visibility determination constraints can be transformed into logical constraints which can easily be embedded into a mixed integer optimization program.

@inproceedings{cdc2023, author = {Papaioannou, Savvas and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G. and Polycarpou, Marios M.}, booktitle = {2023 IEEE 62nd Conference on Decision and Control (CDC)}, title = {Cooperative Receding Horizon 3D Coverage Control with a Team of Networked Aerial Agents}, year = {2023}, volume = {}, number = {}, pages = {4399-4404}, doi = {10.1109/CDC49753.2023.10383310}, }

Unscented Optimal Control for 3D Coverage Planning with an Autonomous UAV Agent. Savvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G. Panayiotou, and Marios M. Polycarpou. In 2023 International Conference on Unmanned Aircraft Systems (ICUAS), 2023.

We propose a novel probabilistically robust controller for the guidance of an unmanned aerial vehicle (UAV) in coverage planning missions, which can simultaneously optimize both the UAV’s motion and camera control inputs for the 3D coverage of a given object of interest. Specifically, the coverage planning problem is formulated in this work as an optimal control problem with logical constraints to enable the UAV agent to jointly: a) select a series of discrete camera field-of-view states which satisfy a set of coverage constraints, and b) optimize its motion control inputs according to a specified mission objective. We show how this hybrid optimal control problem can be solved with standard optimization tools by converting the logical expressions in the constraints into equality/inequality constraints involving only continuous variables. Finally, probabilistic robustness is achieved by integrating the unscented transformation into the proposed controller, thus enabling the design of robust open-loop coverage plans which take into account the future posterior distribution of the UAV’s state inside the planning horizon.

@inproceedings{10156482, author = {Papaioannou, Savvas and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G. and Polycarpou, Marios M.}, booktitle = {2023 International Conference on Unmanned Aircraft Systems (ICUAS)}, title = {Unscented Optimal Control for 3D Coverage Planning with an Autonomous UAV Agent}, year = {2023}, volume = {}, number = {}, pages = {703-712}, doi = {10.1109/ICUAS57906.2023.10156482}, }

Joint Estimation and Control for Multi-Target Passive Monitoring with an Autonomous UAV AgentSavvas Papaioannou, Christos Laoudias, Panayiotis Kolios, Theocharis Theocharides, and Christos G. PanayiotouIn 2023 31st Mediterranean Conference on Control and Automation (MED), 2023

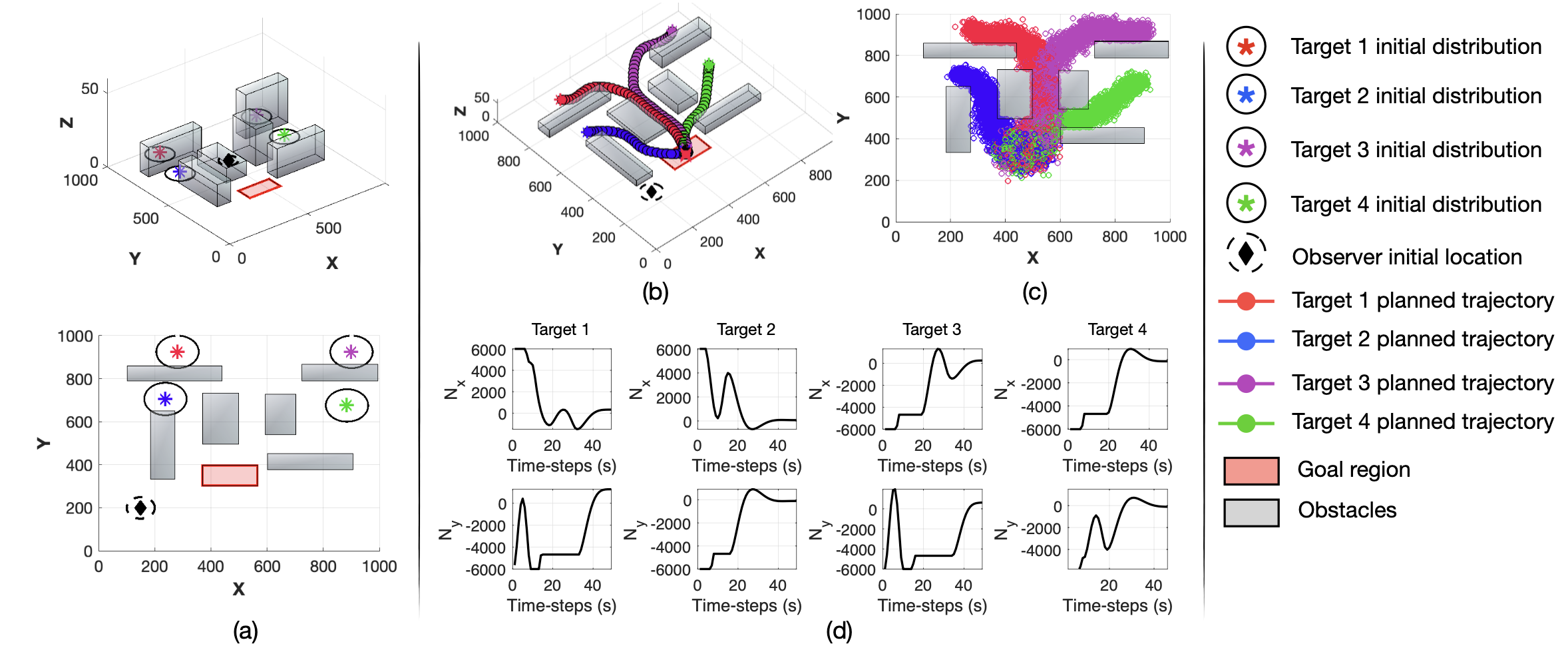

Joint Estimation and Control for Multi-Target Passive Monitoring with an Autonomous UAV AgentSavvas Papaioannou, Christos Laoudias, Panayiotis Kolios, Theocharis Theocharides, and Christos G. PanayiotouIn 2023 31st Mediterranean Conference on Control and Automation (MED), 2023This work considers the problem of passively monitoring multiple moving targets with a single unmanned aerial vehicle (UAV) agent equipped with a direction-finding radar. This is in general a challenging problem due to the unobservability of the target states, and the highly non-linear measurement process. In addition to these challenges, in this work we also consider: a) environments with multiple obstacles where the targets need to be tracked as they manoeuvre through the obstacles, and b) multiple false-alarm measurements caused by the cluttered environment. To address these challenges we first design a model predictive guidance controller which is used to plan hypothetical target trajectories over a rolling finite planning horizon. We then formulate a joint estimation and control problem where the trajectory of the UAV agent is optimized to achieve optimal multi-target monitoring.

@inproceedings{10185768, author = {Papaioannou, Savvas and Laoudias, Christos and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G.}, booktitle = {2023 31st Mediterranean Conference on Control and Automation (MED)}, title = {Joint Estimation and Control for Multi-Target Passive Monitoring with an Autonomous UAV Agent}, year = {2023}, volume = {}, number = {}, pages = {176-181}, doi = {10.1109/MED59994.2023.10185768}, }

Distributed Control for 3D Inspection using Multi-UAV Systems. Angelos Zacharia, Savvas Papaioannou, Panayiotis Kolios, and Christos Panayiotou. In 2023 31st Mediterranean Conference on Control and Automation (MED), 2023.

Cooperative control of multi-UAV systems has attracted substantial research attention due to its significance in various application sectors such as emergency response, search and rescue missions, and critical infrastructure inspection. This paper proposes a distributed control algorithm to generate collision-free trajectories that drive the multi-UAV system to completely inspect a set of 3D points on the surface of an object of interest. The objective of the UAVs is to cooperatively inspect the object of interest in the minimum amount of time. Extensive numerical simulations for a team of quadrotor UAVs inspecting a real 3D structure illustrate the validity and effectiveness of the proposed approach.

@inproceedings{10185881, author = {Zacharia, Angelos and Papaioannou, Savvas and Kolios, Panayiotis and Panayiotou, Christos}, booktitle = {2023 31st Mediterranean Conference on Control and Automation (MED)}, title = {Distributed Control for 3D Inspection using Multi-UAV Systems}, year = {2023}, volume = {}, number = {}, pages = {164-169}, doi = {10.1109/MED59994.2023.10185881}, }

Model Predictive Control For Multiple Castaway Tracking with an Autonomous Aerial Agent. Andreas Anastasiou, Savvas Papaioannou, Panayiotis Kolios, and Christos G. Panayiotou. In 2023 European Control Conference (ECC), 2023.

Over the past few years, a plethora of advancements in Unmanned Aerial Vehicle (UAV) technology has paved the way for UAV-based Search and Rescue (SAR) operations with transformative impact on the outcome of critical life-saving missions. This paper dives into the challenging task of multiple castaway tracking using an autonomous UAV agent. Leveraging the computing power of modern embedded devices, we propose a Model Predictive Control (MPC) framework for tracking multiple castaways assumed to drift afloat in the aftermath of a maritime accident. We consider a stationary radar sensor that is responsible for signaling the search mission by providing noisy measurements of each castaway’s initial state. The UAV agent aims at detecting and tracking the moving targets with its equipped onboard camera sensor that has limited sensing range. In this work, we also experimentally determine the probability of target detection from real-world data by training and evaluating various Convolutional Neural Networks (CNNs). Extensive qualitative and quantitative evaluations demonstrate the performance of the proposed approach.

@inproceedings{10178187, author = {Anastasiou, Andreas and Papaioannou, Savvas and Kolios, Panayiotis and Panayiotou, Christos G.}, booktitle = {2023 European Control Conference (ECC)}, title = {Model Predictive Control For Multiple Castaway Tracking with an Autonomous Aerial Agent}, year = {2023}, volume = {}, number = {}, pages = {1-8}, doi = {10.23919/ECC57647.2023.10178187}, }

2022


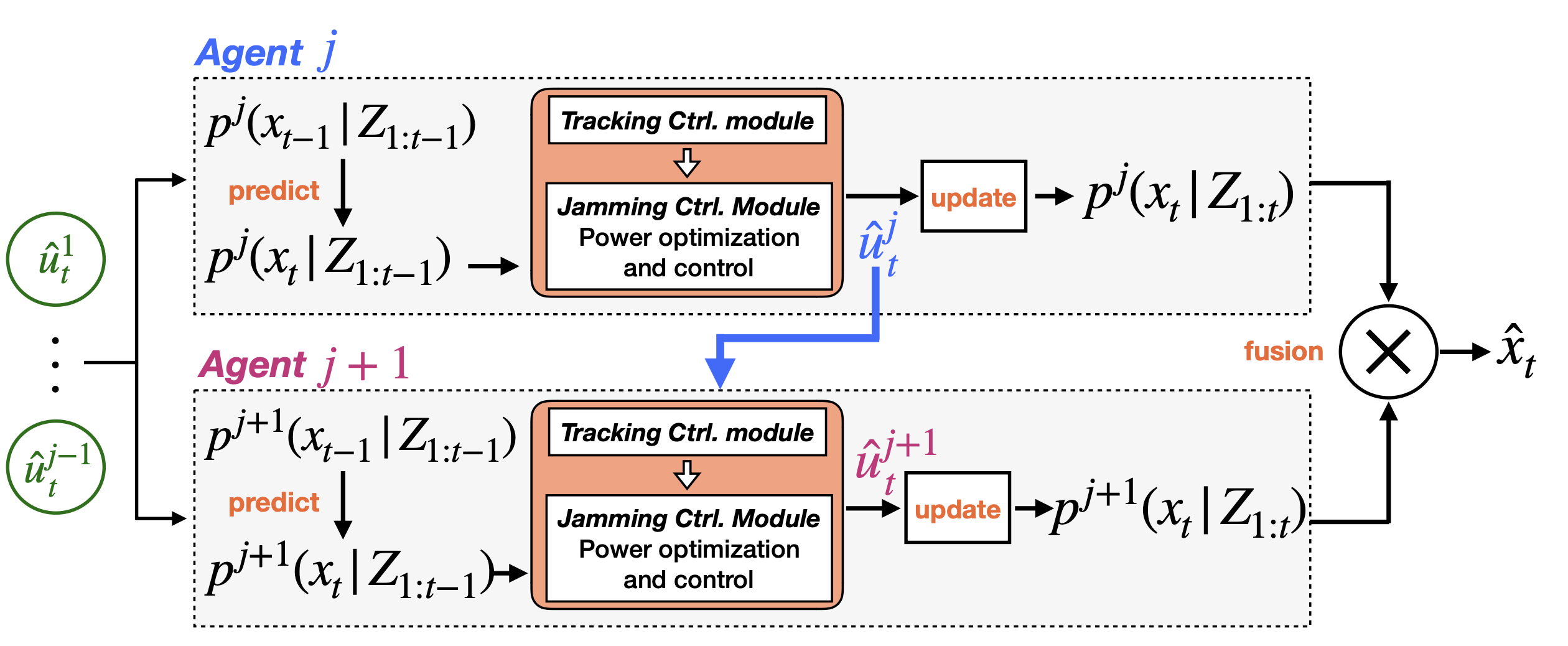

Distributed Estimation and Control for Jamming an Aerial Target With Multiple AgentsSavvas Papaioannou, Panayiotis Kolios, and Georgios EllinasIEEE Transactions on Mobile Computing, 2022

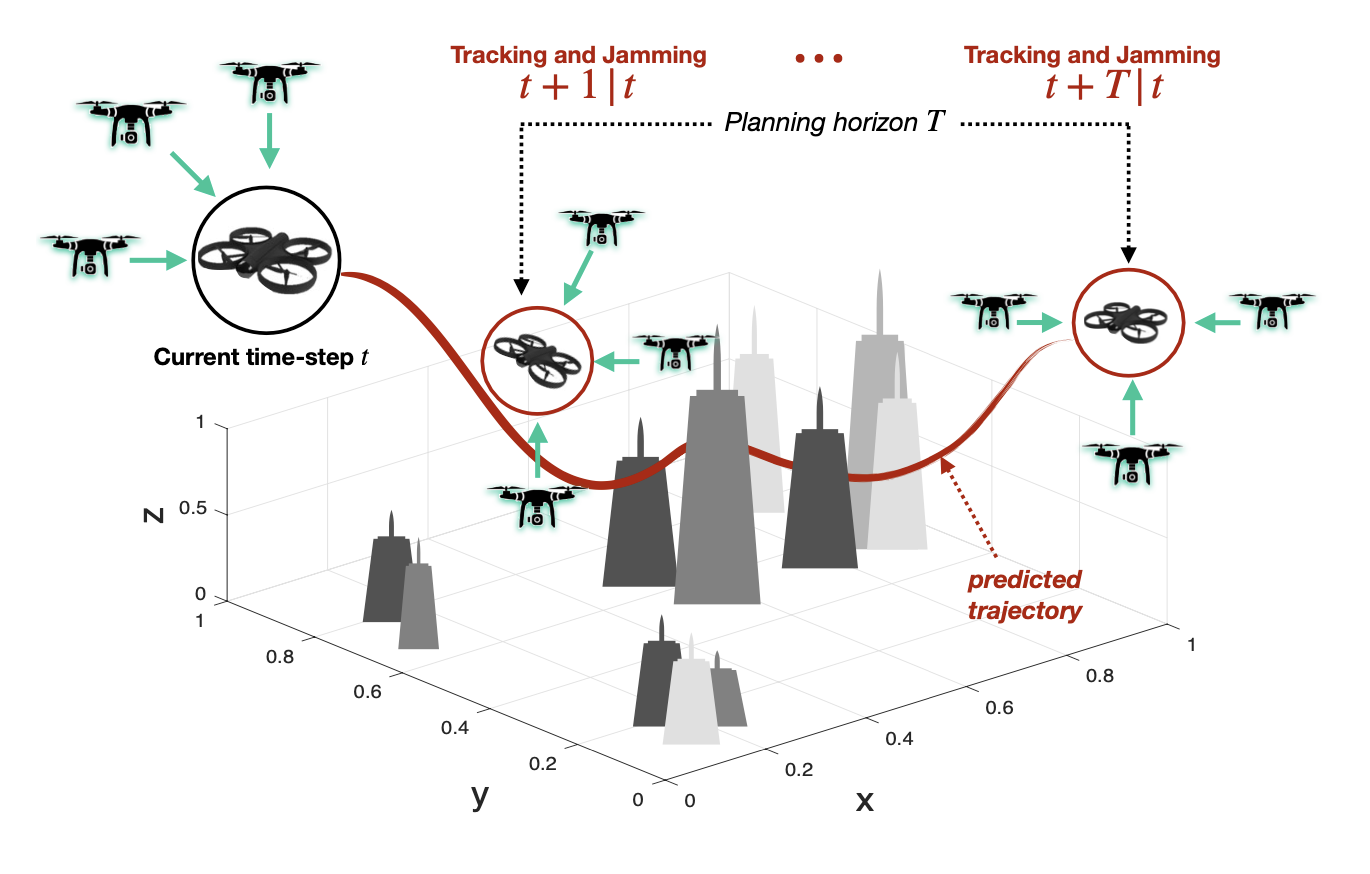

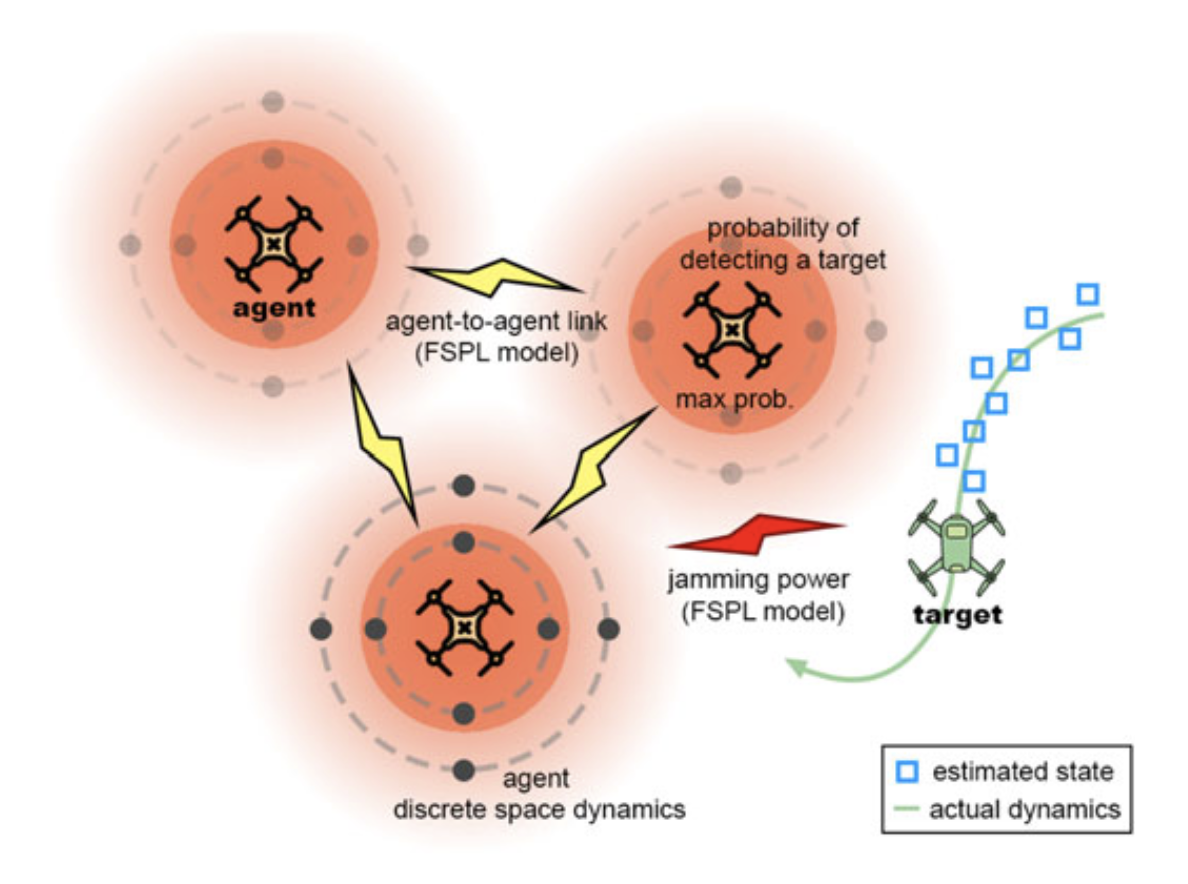

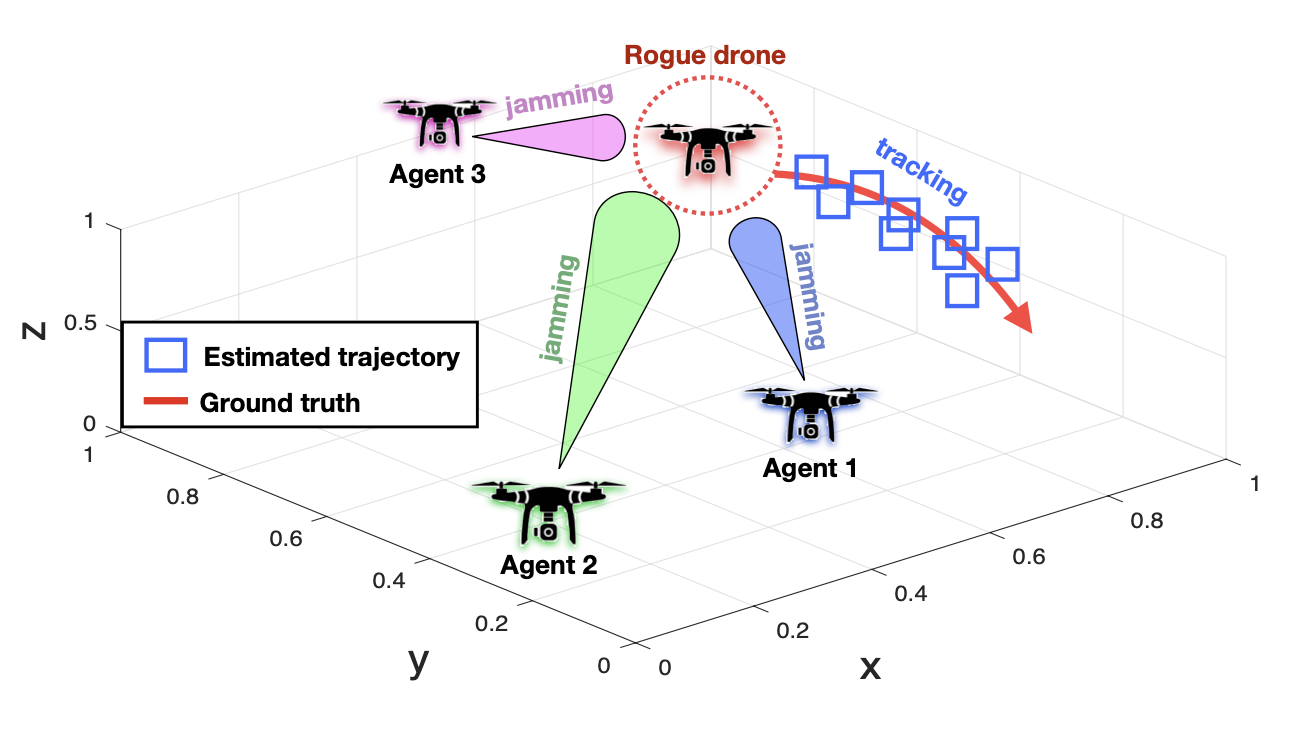

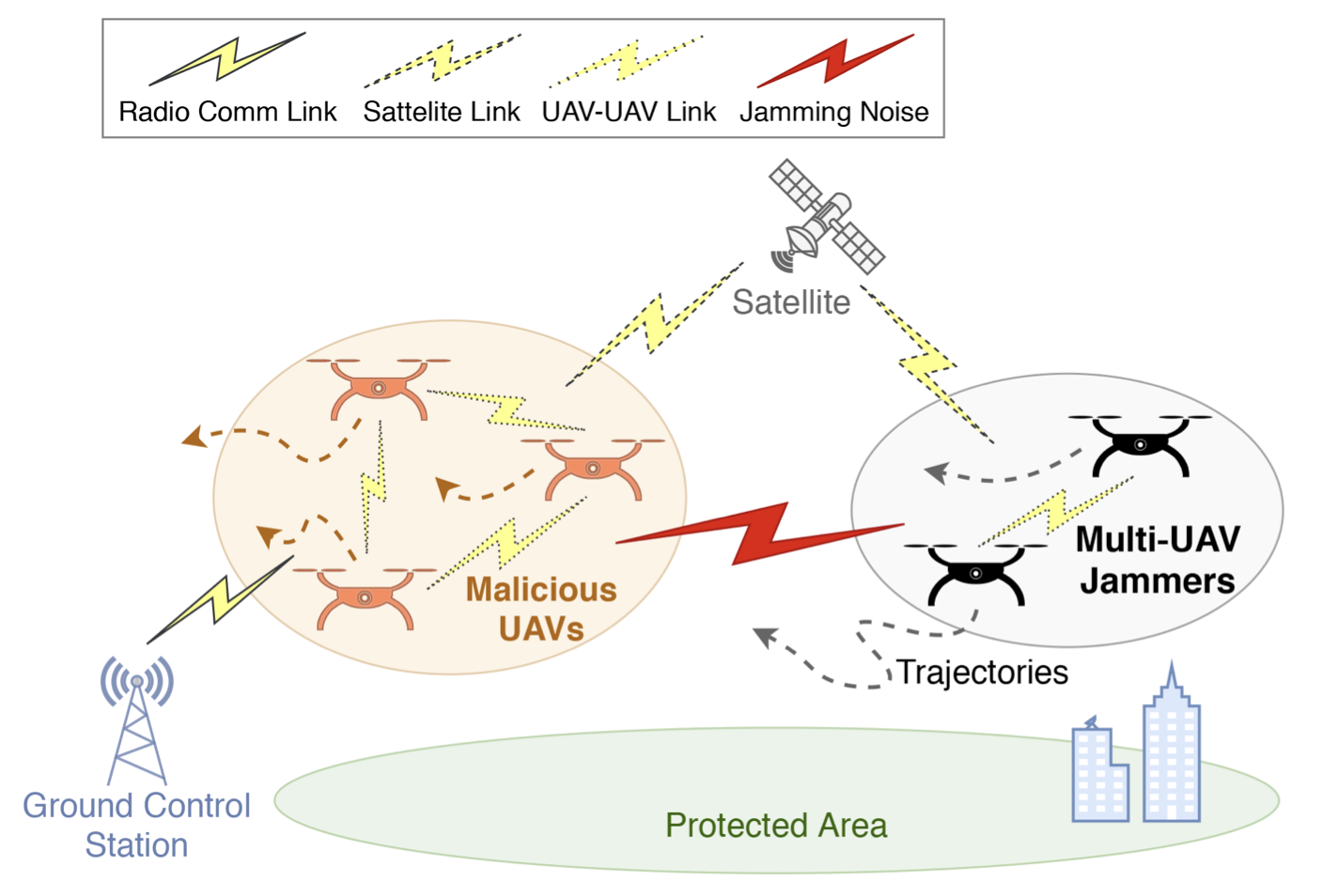

Distributed Estimation and Control for Jamming an Aerial Target With Multiple AgentsSavvas Papaioannou, Panayiotis Kolios, and Georgios EllinasIEEE Transactions on Mobile Computing, 2022This work proposes a distributed estimation and control approach in which a team of aerial agents equipped with radio jamming devices collaborate in order to intercept and concurrently track-and-jam a malicious target, while at the same time minimizing the induced jamming interference amongst the team. Specifically, it is assumed that the malicious target maneuvers in 3D space, avoiding collisions with obstacles and other 3D structures in its way, according to a stochastic dynamical model. Based on this, a track-and-jam control approach is proposed which allows a team of distributed aerial agents to decide their control actions online, over a finite planning horizon, to achieve uninterrupted radio-jamming and tracking of the malicious target, in the presence of jamming interference constraints. The proposed approach is formulated as a distributed model predictive control (MPC) problem and is solved using mixed integer quadratic programming (MIQP). Extensive evaluation of the system’s performance validates the applicability of the proposed approach in challenging scenarios with uncertain target dynamics, noisy measurements, and in the presence of obstacles.

@article{9894723, author = {Papaioannou, Savvas and Kolios, Panayiotis and Ellinas, Georgios}, journal = {IEEE Transactions on Mobile Computing}, title = {Distributed Estimation and Control for Jamming an Aerial Target With Multiple Agents}, year = {2022}, volume = {}, number = {}, pages = {1-15}, doi = {10.1109/TMC.2022.3207589}, }

Autonomous 4D Trajectory Planning for Dynamic and Flexible Air Traffic Management. Christian Vitale, Savvas Papaioannou, Panayiotis Kolios, and Georgios Ellinas. Journal of Intelligent & Robotic Systems, 2022.

With an ever-increasing number of unmanned aerial vehicles (UAVs) in flight, there is a pressing need for scalable and dynamic air traffic management solutions that ensure efficient use of the airspace while maintaining safety and avoiding mid-air collisions. To address this need, a novel framework is developed for computing optimized 4D trajectories for UAVs that ensure dynamic and flexible use of the airspace, while maximizing the available capacity through the minimization of the aggregate traveling times. Specifically, a network manager (NM) is utilized that considers UAV requests (including start/target locations) and addresses inherent mobility uncertainties using a linear-Gaussian system, to compute efficient and safe trajectories. Through the proposed framework, a family of mathematical programming problems is derived to compute control profiles for both distributed and centralized implementations. Extensive simulation results are presented to demonstrate the applicability of the proposed framework to maximize air traffic throughput under probabilistic collision avoidance guarantees.
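As a generic illustration of the linear-Gaussian ingredients mentioned above (not the paper's formulation), the sketch below propagates a UAV's position covariance through a linear model with additive Gaussian noise, then turns a one-sided probabilistic separation requirement along a fixed direction into a deterministic margin on the mean relative position. The model matrices, the half-plane form of the chance constraint and the names `propagate_covariance` and `tightened_separation` are assumptions made for this example.

```python
import numpy as np
from statistics import NormalDist

def propagate_covariance(A, Q, P0, steps):
    """Covariances P_0..P_steps of x_{k+1} = A x_k + w_k with w_k ~ N(0, Q)."""
    covs = [np.asarray(P0, dtype=float)]
    for _ in range(steps):
        covs.append(A @ covs[-1] @ A.T + Q)
    return covs

def tightened_separation(d_min, direction, rel_cov, eps):
    """Smallest mean separation along `direction` such that, for a Gaussian
    relative position r with covariance rel_cov, P(direction^T r < d_min) <= eps.
    (Illustrative one-sided chance constraint, an assumption of this sketch.)"""
    a = np.asarray(direction, dtype=float)
    a = a / np.linalg.norm(a)
    z = NormalDist().inv_cdf(1.0 - eps)  # standard normal (1 - eps)-quantile
    return d_min + z * float(np.sqrt(a @ rel_cov @ a))

# Hypothetical example: random-walk position model, 5 prediction steps.
A = np.eye(2)
Q = 0.1 * np.eye(2)
covs = propagate_covariance(A, Q, np.zeros((2, 2)), steps=5)
# Two agents with independent position errors: relative covariance is the sum.
rel_cov = covs[5] + covs[5]
margin = tightened_separation(5.0, [1.0, 0.0], rel_cov, eps=0.025)
```

Here the required mean separation grows from the nominal 5.0 to roughly 6.96 because the predicted uncertainty has grown over the horizon; this is one common way to make collision avoidance probabilistic while keeping the planning constraints deterministic.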

@article{vitale2022autonomous, title = {{Autonomous 4D Trajectory Planning for Dynamic and Flexible Air Traffic Management}}, author = {Vitale, Christian and Papaioannou, Savvas and Kolios, Panayiotis and Ellinas, Georgios}, journal = {Journal of Intelligent \& Robotic Systems}, volume = {106}, number = {1}, pages = {11}, year = {2022}, publisher = {Springer}, doi = {10.1007/s10846-022-01715-z}, }

Multi-Agent Coordinated Close-in Jamming for Disabling a Rogue Drone. Panayiota Valianti, Savvas Papaioannou, Panayiotis Kolios, and Georgios Ellinas. IEEE Transactions on Mobile Computing, 2022.

Drones, including remotely piloted aircraft or unmanned aerial vehicles, have become extremely appealing in recent years, with a multitude of applications and usages. However, they can potentially present major threats for security and public safety, especially when they fly across critical infrastructures and public spaces. This work investigates a novel counter-drone solution by proposing a multi-agent framework in which a team of pursuer drones cooperate in order to track and jam a rogue drone. Within the proposed framework, a joint mobility and power control solution is developed to optimize the respective decisions of each cooperating agent in order to best track and intercept the moving rogue drone. Both centralized and distributed variants of the joint optimization problem are developed and extensive simulations are conducted to evaluate the performance of the problem variants and to demonstrate the effectiveness of the proposed solution.

@article{9363641, author = {Valianti, Panayiota and Papaioannou, Savvas and Kolios, Panayiotis and Ellinas, Georgios}, journal = {IEEE Transactions on Mobile Computing}, title = {Multi-Agent Coordinated Close-in Jamming for Disabling a Rogue Drone}, year = {2022}, volume = {21}, number = {10}, pages = {3700-3717}, doi = {10.1109/TMC.2021.3062225}, }

Integrated Ray-Tracing and Coverage Planning Control using Reinforcement Learning. Savvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G. Panayiotou, and Marios M. Polycarpou. In 2022 IEEE 61st Conference on Decision and Control (CDC), 2022.

In this work we propose a coverage planning control approach which allows a mobile agent, equipped with a controllable sensor (i.e., a camera) with limited sensing domain (i.e., finite sensing range and angle of view), to cover the surface area of an object of interest. The proposed approach integrates ray-tracing into the coverage planning process, thus allowing the agent to identify which parts of the scene are visible at any point in time. The problem of integrated ray-tracing and coverage planning control is first formulated as a constrained optimal control problem (OCP), which aims at determining the agent’s optimal control inputs over a finite planning horizon, that minimize the coverage time. Efficiently solving the resulting OCP is however very challenging due to non-convex and nonlinear visibility constraints. To overcome this limitation, the problem is converted into a Markov decision process (MDP) which is then solved using reinforcement learning. In particular, we show that a controller which follows an optimal control law can be learned using off-policy temporal-difference control (i.e., Q-learning). Extensive numerical experiments demonstrate the effectiveness of the proposed approach for various configurations of the agent and the object of interest.
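Off-policy temporal-difference control (Q-learning) moves each action-value estimate towards the immediate reward plus the discounted value of the best next action, regardless of which exploratory action the agent actually takes next. The toy corridor below is a hypothetical stand-in for the paper's coverage MDP (whose states would encode what the camera can see via ray-tracing); the environment, reward and hyperparameters are all assumptions chosen so that the sketch runs quickly.

```python
import numpy as np

def q_learning(n_states=6, episodes=500, alpha=0.5, gamma=0.95, epsilon=0.2, seed=0):
    """Tabular Q-learning on a hypothetical 1D corridor (illustrative only).

    The agent starts in state 0 and the episode ends at state n_states - 1.
    Actions: 0 = step left, 1 = step right; every step yields reward -1,
    so the optimal policy reaches the goal in as few steps as possible.
    """
    rng = np.random.default_rng(seed)
    Q = np.zeros((n_states, 2))
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        for _ in range(200):  # safety cap on episode length
            # Epsilon-greedy behaviour policy (explores with probability epsilon).
            if rng.random() < epsilon:
                a = int(rng.integers(2))
            else:
                a = int(np.argmax(Q[s]))
            s_next = max(0, s - 1) if a == 0 else min(goal, s + 1)
            r = -1.0
            # Off-policy TD target: bootstrap from the greedy value of the next
            # state, or from 0 when the next state is terminal.
            target = r if s_next == goal else r + gamma * np.max(Q[s_next])
            Q[s, a] += alpha * (target - Q[s, a])
            s = s_next
            if s == goal:
                break
    return Q

Q = q_learning()
greedy_actions = np.argmax(Q[:-1], axis=1)  # greedy action in each non-terminal state
```

Because the target uses the maximum over next actions, the learned greedy policy (always step right here) can be optimal even though the behaviour policy keeps exploring.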

@inproceedings{9992360, author = {Papaioannou, Savvas and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G. and Polycarpou, Marios M.}, booktitle = {2022 IEEE 61st Conference on Decision and Control (CDC)}, title = {Integrated Ray-Tracing and Coverage Planning Control using Reinforcement Learning}, year = {2022}, volume = {}, number = {}, pages = {7200-7207}, doi = {10.1109/CDC51059.2022.9992360}, }

UAV-based Receding Horizon Control for 3D Inspection Planning. Savvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G. Panayiotou, and Marios M. Polycarpou. In 2022 International Conference on Unmanned Aircraft Systems (ICUAS), 2022.

Nowadays, unmanned aerial vehicles or UAVs are being used for a wide range of tasks, including infrastructure inspection, automated monitoring and coverage. This paper investigates the problem of 3D inspection planning with an autonomous UAV agent which is subject to dynamical and sensing constraints. We propose a receding horizon 3D inspection planning control approach for generating optimal trajectories which enable an autonomous UAV agent to inspect a finite number of feature-points scattered on the surface of a cuboid-like structure of interest. The inspection planning problem is formulated as a constrained open-loop optimal control problem and is solved using mixed integer programming (MIP) optimization. Quantitative and qualitative evaluation demonstrates the effectiveness of the proposed approach.

@inproceedings{9836051, author = {Papaioannou, Savvas and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G. and Polycarpou, Marios M.}, booktitle = {2022 International Conference on Unmanned Aircraft Systems (ICUAS)}, title = {UAV-based Receding Horizon Control for 3D Inspection Planning}, year = {2022}, volume = {}, number = {}, pages = {1121-1130}, doi = {10.1109/ICUAS54217.2022.9836051}, }

2021


Towards Automated 3D Search Planning for Emergency Response MissionsSavvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G Panayiotou, and Marios M PolycarpouJournal of Intelligent & Robotic Systems, 2021

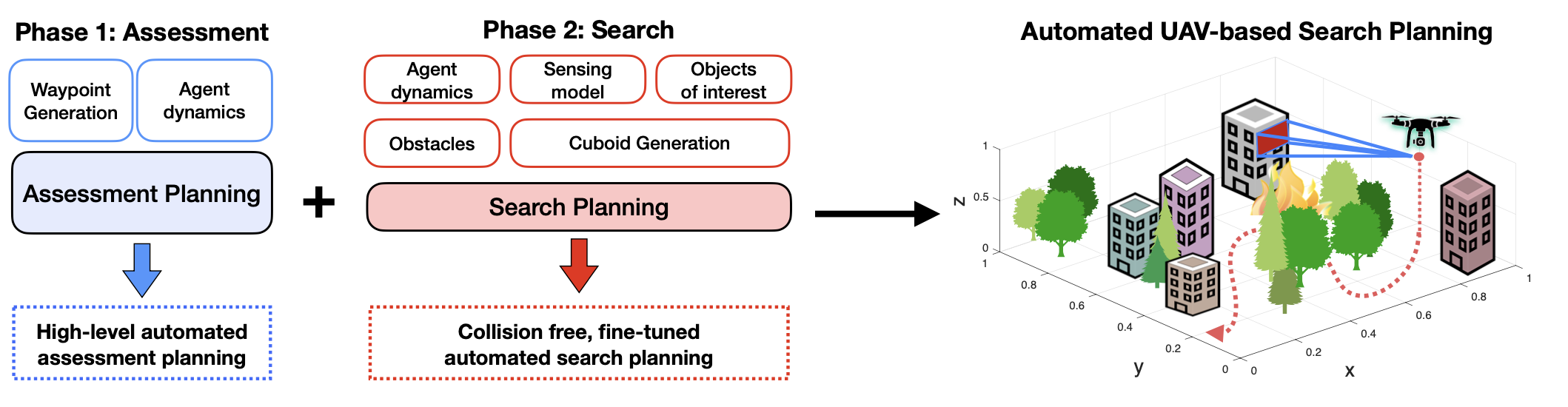

Towards Automated 3D Search Planning for Emergency Response MissionsSavvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G Panayiotou, and Marios M PolycarpouJournal of Intelligent & Robotic Systems, 2021The ability to efficiently plan and execute automated and precise search missions using unmanned aerial vehicles (UAVs) during emergency response situations is imperative. Precise navigation between obstacles and time-efficient searching of 3D structures and buildings are essential for locating survivors and people in need in emergency response missions. In this work we address this challenging problem by proposing a unified search planning framework that automates the process of UAV-based search planning in 3D environments. Specifically, we propose a novel search planning framework which enables automated planning and execution of collision-free search trajectories in 3D by taking into account low-level mission constrains (e.g., the UAV dynamical and sensing model), mission objectives (e.g., the mission execution time and the UAV energy efficiency) and user-defined mission specifications (e.g., the 3D structures to be searched and minimum detection probability constraints). The capabilities and performance of the proposed approach are demonstrated through extensive simulated 3D search scenarios.

@article{papaioannou2021towards, title = {{Towards Automated 3D Search Planning for Emergency Response Missions}}, author = {Papaioannou, Savvas and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G and Polycarpou, Marios M}, journal = {Journal of Intelligent \& Robotic Systems}, volume = {103}, number = {1}, pages = {2}, year = {2021}, publisher = {Springer}, doi = {10.1007/s10846-021-01449-4}, }

Deep Reinforcement Learning Multi-UAV Trajectory Control for Target TrackingJiseon Moon, Savvas Papaioannou, Christos Laoudias, Panayiotis Kolios, and Sunwoo KimIEEE Internet of Things Journal, 2021

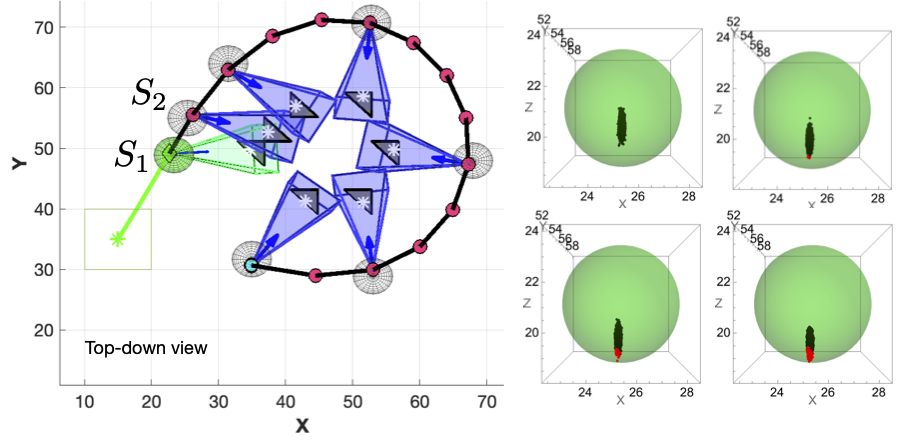

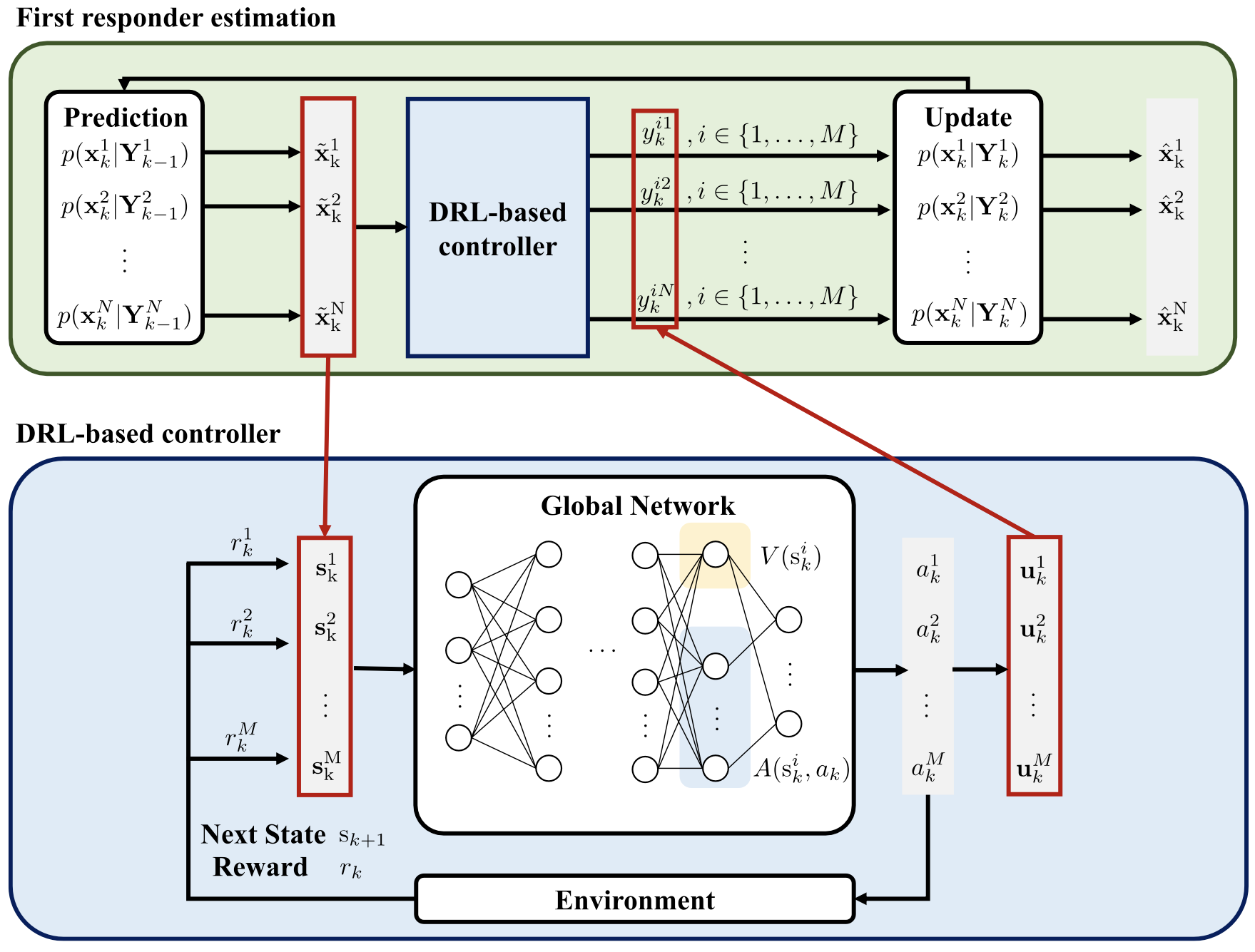

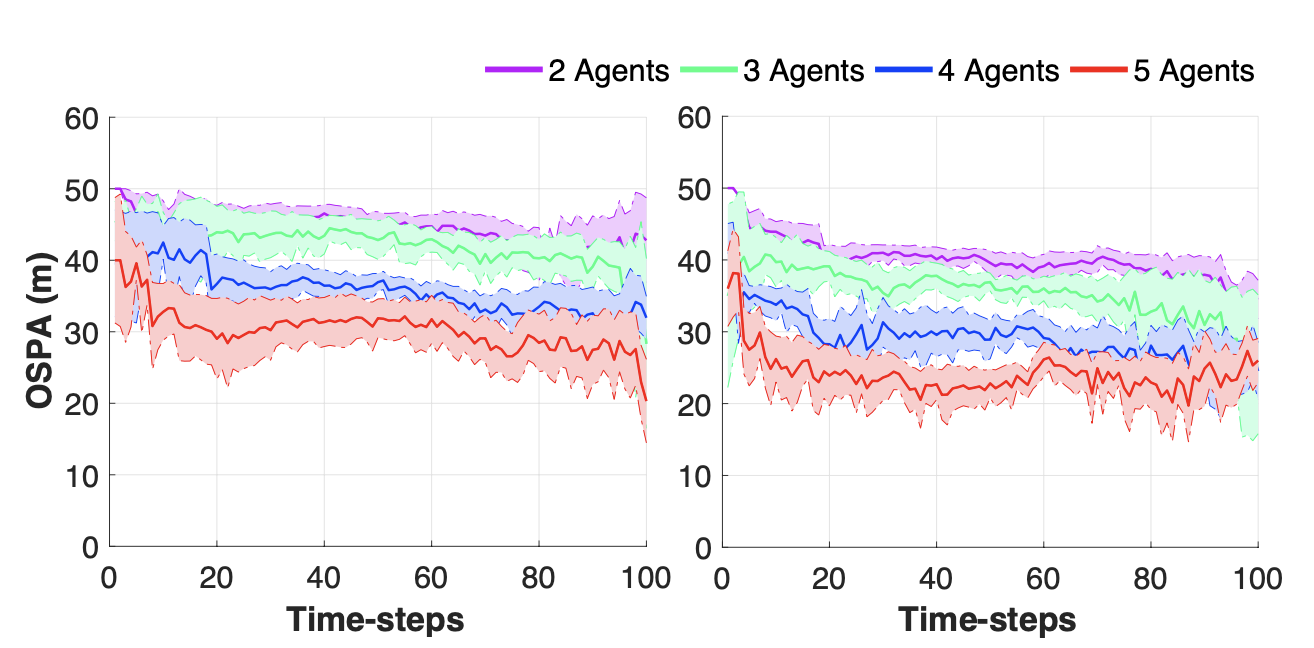

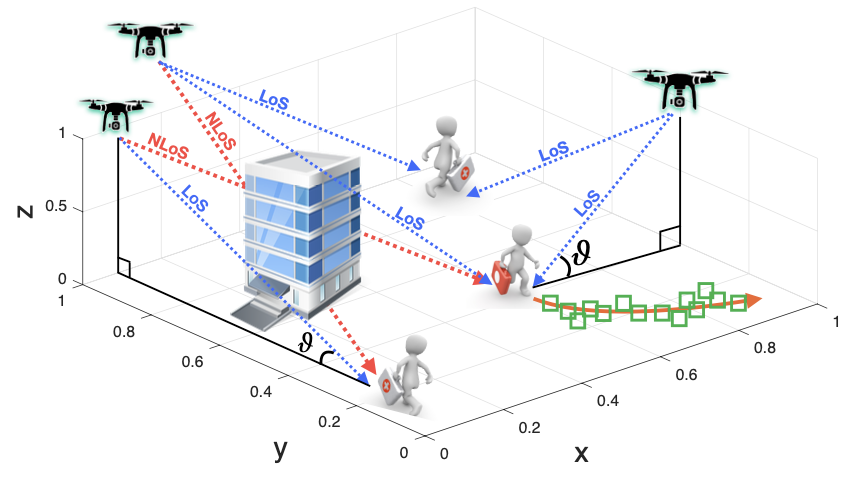

Deep Reinforcement Learning Multi-UAV Trajectory Control for Target TrackingJiseon Moon, Savvas Papaioannou, Christos Laoudias, Panayiotis Kolios, and Sunwoo KimIEEE Internet of Things Journal, 2021In this article, we propose a novel deep reinforcement learning (DRL) approach for controlling multiple unmanned aerial vehicles (UAVs) with the ultimate purpose of tracking multiple first responders (FRs) in challenging 3-D environments in the presence of obstacles and occlusions. We assume that the UAVs receive noisy distance measurements from the FRs which are of two types, i.e., Line of Sight (LoS) and non-LoS (NLoS) measurements and which are used by the UAV agents in order to estimate the state (i.e., position) of the FRs. Subsequently, the proposed DRL-based controller selects the optimal joint control actions according to the Cramér–Rao lower bound (CRLB) of the joint measurement likelihood function to achieve high tracking performance. Specifically, the optimal UAV control actions are quantified by the proposed reward function, which considers both the CRLB of the entire system and each UAV’s individual contribution to the system, called global reward and difference reward, respectively. Since the UAVs take actions that reduce the CRLB of the entire system, tracking accuracy is improved by ensuring the reception of high quality LoS measurements with high probability. Our simulation results show that the proposed DRL-based UAV controller provides a highly accurate target tracking solution with a very low runtime cost.
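A difference reward credits each UAV with the change in the global reward caused by its presence, D_i = G(all UAVs) - G(all UAVs except i). The sketch below assumes a generic 2D range-only measurement model, takes G as the negative trace of the CRLB (the inverse Fisher information), and models NLoS measurements simply as noisier ranges; these modelling choices and the function names are assumptions for illustration, and the reward used in the article may be shaped differently.

```python
import numpy as np

def fisher_information(target, sensors, sigmas):
    """Fisher information of the target position from range measurements
    ||target - s_i|| + noise_i, noise_i ~ N(0, sigmas[i]^2) (assumed model)."""
    J = np.zeros((target.size, target.size))
    for s, sigma in zip(sensors, sigmas):
        u = (target - s) / np.linalg.norm(target - s)  # unit line-of-sight vector
        J += np.outer(u, u) / sigma**2
    return J

def global_reward(target, sensors, sigmas):
    """Global reward G: negative trace of the CRLB (higher is better)."""
    return -np.trace(np.linalg.inv(fisher_information(target, sensors, sigmas)))

def difference_rewards(target, sensors, sigmas):
    """Return G and D_i = G(all UAVs) - G(all UAVs except i) for every UAV i."""
    g_all = global_reward(target, sensors, sigmas)
    rewards = []
    for i in range(len(sensors)):
        others = [s for k, s in enumerate(sensors) if k != i]
        other_sigmas = [v for k, v in enumerate(sigmas) if k != i]
        rewards.append(g_all - global_reward(target, others, other_sigmas))
    return g_all, rewards

# Hypothetical example: three UAVs evenly spaced around one target;
# in the second call the third UAV receives NLoS (noisier) ranges.
angles = (0.0, 2 * np.pi / 3, 4 * np.pi / 3)
sensors = [10 * np.array([np.cos(t), np.sin(t)]) for t in angles]
target = np.zeros(2)
g_los, d_los = difference_rewards(target, sensors, [1.0, 1.0, 1.0])
g_mix, d_mix = difference_rewards(target, sensors, [1.0, 1.0, 3.0])
```

With symmetric LoS geometry every UAV gets the same difference reward (4/3 here), while the UAV receiving noisier NLoS ranges contributes less and so receives a smaller one, which is the incentive the text describes.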

@article{9406813, author = {Moon, Jiseon and Papaioannou, Savvas and Laoudias, Christos and Kolios, Panayiotis and Kim, Sunwoo}, journal = {IEEE Internet of Things Journal}, title = {Deep Reinforcement Learning Multi-UAV Trajectory Control for Target Tracking}, year = {2021}, volume = {8}, number = {20}, pages = {15441-15455}, doi = {10.1109/JIOT.2021.3073973}, }

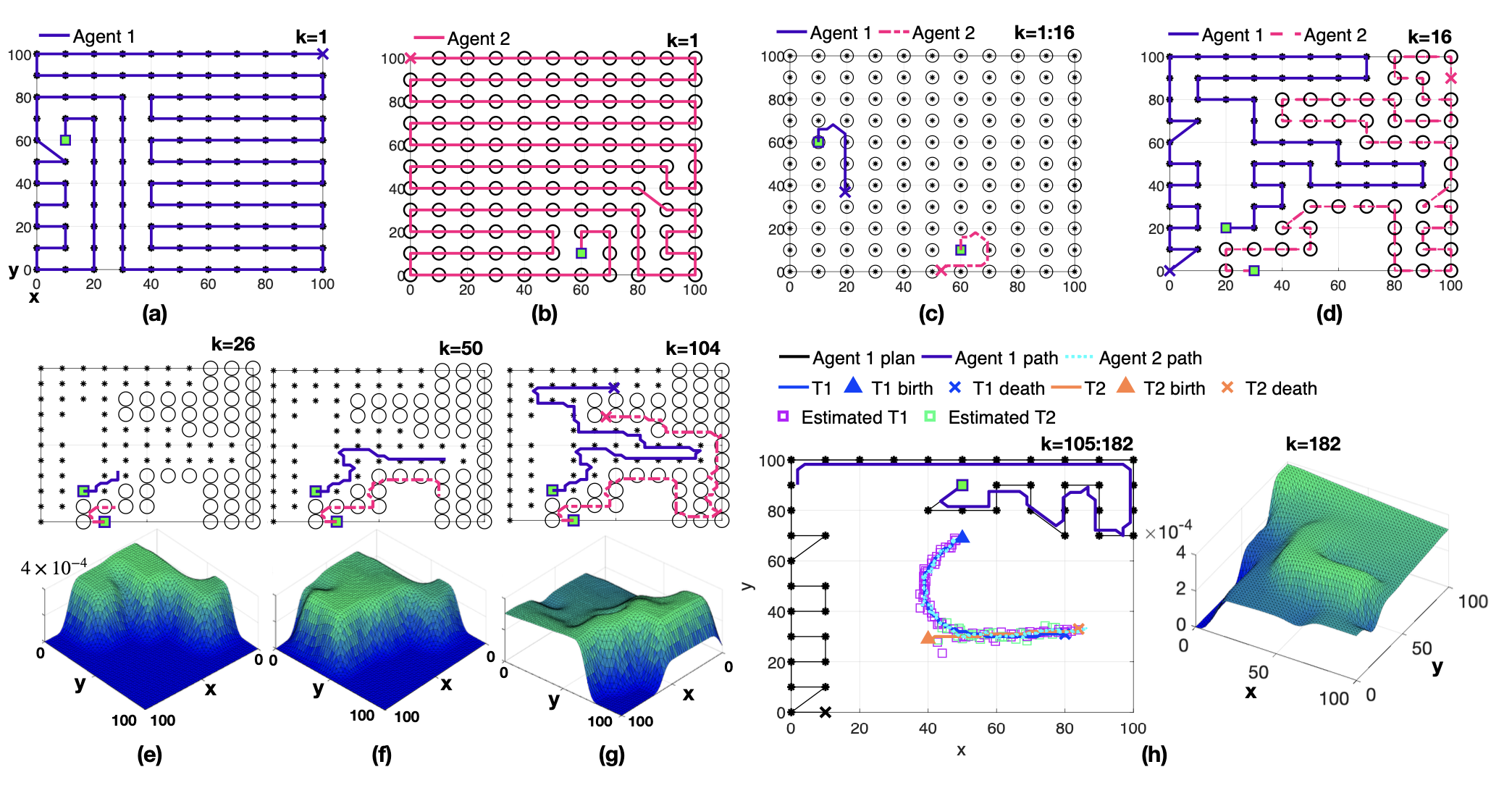

A Cooperative Multiagent Probabilistic Framework for Search and Track Missions. Savvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G. Panayiotou, and Marios M. Polycarpou. IEEE Transactions on Control of Network Systems, 2021.

In this work, a robust and scalable cooperative multiagent searching and tracking (SAT) framework is proposed. Specifically, we study the problem of cooperative SAT of multiple moving targets by a group of autonomous mobile agents with limited sensing capabilities. We assume that the actual number of targets present is not known a priori and that target births/deaths can occur anywhere inside the surveillance region; thus efficient search strategies are required to detect and track as many targets as possible. To address the aforementioned challenges, we recursively compute and propagate in time the SAT density. Using the SAT density, we then develop decentralized cooperative look-ahead strategies for efficient SAT of an unknown number of targets inside a bounded surveillance area.

@article{9262008, author = {Papaioannou, Savvas and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G. and Polycarpou, Marios M.}, journal = {IEEE Transactions on Control of Network Systems}, title = {A Cooperative Multiagent Probabilistic Framework for Search and Track Missions}, year = {2021}, volume = {8}, number = {2}, pages = {847-858}, doi = {10.1109/TCNS.2020.3038843}, }

Downing a Rogue Drone with a Team of Aerial Radio Signal Jammers. Savvas Papaioannou, Panayiotis Kolios, and Georgios Ellinas. In 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2021.

This work proposes a novel distributed control framework in which a team of pursuer agents equipped with a radio jamming device cooperate in order to track and radio-jam a rogue target in 3D space, with the ultimate purpose of disrupting its communication and navigation circuitry. The target evolves in 3D space according to a stochastic dynamical model and it can appear and disappear from the surveillance area at random times. The pursuer agents cooperate in order to estimate the probability of target existence and its spatial density from a set of noisy measurements in the presence of clutter. Additionally, the proposed control framework allows a team of pursuer agents to optimally choose their radio transmission levels and their mobility control actions in order to ensure uninterrupted radio jamming to the target, as well as to avoid the jamming interference among the team of pursuer agents. Extensive simulation analysis of the system’s performance validates the applicability of the proposed approach.

@inproceedings{9636065, author = {Papaioannou, Savvas and Kolios, Panayiotis and Ellinas, Georgios}, booktitle = {2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, title = {Downing a Rogue Drone with a Team of Aerial Radio Signal Jammers}, year = {2021}, volume = {}, number = {}, pages = {2555-2562}, doi = {10.1109/IROS51168.2021.9636065}, }

3D Trajectory Planning for UAV-based Search Missions: An Integrated Assessment and Search Planning Approach. Savvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G. Panayiotou, and Marios M. Polycarpou. In 2021 International Conference on Unmanned Aircraft Systems (ICUAS), 2021.

The ability to efficiently plan and execute search missions in challenging and complex environments during natural and man-made disasters is imperative. In many emergency situations, precise navigation between obstacles and time-efficient searching around 3D structures is essential for finding survivors. In this work we propose an integrated assessment and search planning approach which allows an autonomous UAV (unmanned aerial vehicle) agent to plan and execute collision-free search trajectories in 3D environments. More specifically, the proposed search-planning framework aims to integrate and automate the first two phases (i.e., the assessment phase and the search phase) of a traditional search-and-rescue (SAR) mission. In the first stage, termed assessment-planning, we aim to find a high-level assessment plan which the UAV agent can execute in order to visit a set of points of interest. The generated plan of this stage guides the UAV to fly over the objects of interest, thus providing a first assessment of the situation at hand. In the second stage, termed search-planning, the UAV trajectory is further fine-tuned to allow the UAV to search in 3D (i.e., across all faces) the objects of interest for survivors. The performance of the proposed approach is demonstrated through extensive simulation analysis.

@inproceedings{9476869, author = {Papaioannou, Savvas and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G. and Polycarpou, Marios M.}, booktitle = {2021 International Conference on Unmanned Aircraft Systems (ICUAS)}, title = {3D Trajectory Planning for UAV-based Search Missions: An Integrated Assessment and Search Planning Approach}, year = {2021}, volume = {}, number = {}, pages = {517-526}, doi = {10.1109/ICUAS51884.2021.9476869}, }

2020


Jointly-Optimized Searching and Tracking with Random Finite Sets. Savvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G. Panayiotou, and Marios M. Polycarpou. IEEE Transactions on Mobile Computing, 2020.

In this paper, we investigate the problem of joint searching and tracking of multiple mobile targets by a group of mobile agents. The targets appear and disappear at random times inside a surveillance region and their positions are random and unknown. The agents have limited sensing range and receive noisy measurements from the targets. A decision and control problem arises, where the mode of operation (i.e., search or track) as well as the mobility control action for each agent, at each time instance, must be determined so that the collective goal of searching and tracking is achieved. We build our approach upon the theory of random finite sets (RFS) and we use Bayesian multi-object stochastic filtering to simultaneously estimate the time-varying number of targets and their states from a sequence of noisy measurements. We formulate the above problem as a non-linear binary program (NLBP) and show that it can be approximated by a genetic algorithm. Finally, to study the effectiveness and performance of the proposed approach we have conducted extensive simulation experiments.

@article{8736404, author = {Papaioannou, Savvas and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G. and Polycarpou, Marios M.}, journal = {IEEE Transactions on Mobile Computing}, title = {Jointly-Optimized Searching and Tracking with Random Finite Sets}, year = {2020}, volume = {19}, number = {10}, pages = {2374-2391}, doi = {10.1109/TMC.2019.2922133}, }

Coordinated CRLB-based Control for Tracking Multiple First Responders in 3D Environments. Savvas Papaioannou, Sungjin Kim, Christos Laoudias, Panayiotis Kolios, Sunwoo Kim, Theocharis Theocharides, Christos Panayiotou, and Marios Polycarpou. In 2020 International Conference on Unmanned Aircraft Systems (ICUAS), 2020.

In this paper we study the problem of tracking a team of first responders with a fleet of autonomous mobile flying agents, operating in 3D environments. We assume that the first responders exhibit stochastic dynamics and evolve inside challenging environments with obstacles and occlusions. As a result, the mobile agents probabilistically receive noisy line-of-sight (LoS), as well as non-line-of-sight (NLoS) range measurements from the first responders. In this work, we propose a novel estimation (i.e., estimating the position of multiple first responders over time) and control (i.e., controlling the movement of the agents) framework based on the Cramér-Rao lower bound (CRLB). More specifically, we analytically derive the CRLB of the measurement likelihood function which we use as a control criterion to select the optimal joint control actions over all agents, thus achieving optimized tracking performance. The effectiveness of the proposed multi-agent multi-target estimation and control framework is demonstrated through an extensive simulation analysis.
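A minimal sketch of using the CRLB as a control criterion: for range measurements with Gaussian noise, the Fisher information about the target position is the sum, over agents, of the outer product of the unit vector between agent and target scaled by the inverse noise variance, and the CRLB is its inverse. The code scores every joint move from a small, hypothetical move set by the trace of the CRLB and keeps the best one. It assumes a single target, 2D geometry, LoS measurements with equal noise and exhaustive search; the function names are illustrative, and this is not the paper's derivation, which also covers NLoS measurements and multiple first responders.

```python
import itertools
import numpy as np

def range_crlb(target, sensors, sigmas):
    """CRLB (inverse Fisher information) for a target position estimated from
    range measurements ||target - s_i|| + noise_i, noise_i ~ N(0, sigmas[i]^2)."""
    J = np.zeros((target.size, target.size))
    for s, sigma in zip(sensors, sigmas):
        u = (target - s) / np.linalg.norm(target - s)  # unit line-of-sight vector
        J += np.outer(u, u) / sigma**2
    return np.linalg.inv(J)

def best_joint_action(target, positions, moves, sigma=1.0):
    """Pick the joint move (one displacement per agent) minimising trace(CRLB).
    `moves` is a hypothetical finite set of candidate displacements."""
    best, best_cost = None, np.inf
    for joint in itertools.product(moves, repeat=len(positions)):
        new_pos = [p + np.asarray(m) for p, m in zip(positions, joint)]
        try:
            cost = np.trace(range_crlb(target, new_pos, [sigma] * len(new_pos)))
        except np.linalg.LinAlgError:
            continue  # singular geometry (e.g. all agents on one line): skip
        if cost < best_cost:
            best, best_cost = joint, cost
    return best, best_cost

# Hypothetical example: two agents start at the same spot; moving one of them
# gives orthogonal viewing directions, the most informative geometry here.
target = np.zeros(2)
positions = [np.array([1.0, 0.0]), np.array([1.0, 0.0])]
moves = [(0, 0), (-1, 1)]
best, cost = best_joint_action(target, positions, moves)
```

Exhaustive search grows exponentially with the number of agents, so it is only meant to make the criterion concrete; with orthogonal unit-noise measurements the CRLB is the identity and its trace is 2.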

@inproceedings{9213937, author = {Papaioannou, Savvas and Kim, Sungjin and Laoudias, Christos and Kolios, Panayiotis and Kim, Sunwoo and Theocharides, Theocharis and Panayiotou, Christos and Polycarpou, Marios}, booktitle = {2020 International Conference on Unmanned Aircraft Systems (ICUAS)}, title = {Coordinated CRLB-based Control for Tracking Multiple First Responders in 3D Environments}, year = {2020}, volume = {}, number = {}, pages = {1475-1484}, doi = {10.1109/ICUAS48674.2020.9213937}, }

Multi-Agent Coordinated Interception of Multiple Rogue Drones. Panayiota Valianti, Savvas Papaioannou, Panayiotis Kolios, and Georgios Ellinas. In 2020 IEEE Global Communications Conference (GLOBECOM), 2020.

Over the last few years there has been an unprecedented interest in unmanned aerial vehicles (UAVs). However, drones potentially pose great threats to security and public safety, especially when their malicious use involves critical infrastructures and public spaces. This work proposes a multiagent counter-drone system where a team of pursuer drones cooperate in order to track and jam multiple rogue drones. Specifically, a cooperative multi-agent approach is proposed in which the best joint mobility and power control actions of each agent are chosen so that the rogue drones are optimally tracked and jammed over time. Two variants of the joint optimization problem are developed and extensive simulations are conducted so as to evaluate the performance of the proposed approach.

@inproceedings{9322489, author = {Valianti, Panayiota and Papaioannou, Savvas and Kolios, Panayiotis and Ellinas, Georgios}, booktitle = {2020 IEEE Global Communications Conference (GLOBECOM)}, title = {Multi-Agent Coordinated Interception of Multiple Rogue Drones}, year = {2020}, volume = {}, number = {}, pages = {1-6}, doi = {10.1109/GLOBECOM42002.2020.9322489}, }

Cooperative Simultaneous Tracking and Jamming for Disabling a Rogue Drone. Savvas Papaioannou, Panayiotis Kolios, Christos G. Panayiotou, and Marios M. Polycarpou. In 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020.

This work investigates the problem of simultaneous tracking and jamming of a rogue drone in 3D space with a team of cooperative unmanned aerial vehicles (UAVs). We propose a decentralized estimation, decision and control framework in which a team of UAVs cooperate in order to a) optimally choose their mobility control actions that result in accurate target tracking and b) select the desired transmit power levels which cause uninterrupted radio jamming and thus ultimately disrupt the operation of the rogue drone. The proposed decision and control framework allows the UAVs to reconfigure themselves in 3D space such that the cooperative simultaneous tracking and jamming (CSTJ) objective is achieved, while at the same time ensuring that the unwanted inter-UAV jamming interference caused during CSTJ is kept below a specified critical threshold. Finally, we formulate this problem under challenging conditions (i.e., uncertain dynamics, noisy measurements and false alarms). Extensive simulation experiments illustrate the performance of the proposed approach.

@inproceedings{9340835, author = {Papaioannou, Savvas and Kolios, Panayiotis and Panayiotou, Christos G. and Polycarpou, Marios M.}, booktitle = {2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, title = {Cooperative Simultaneous Tracking and Jamming for Disabling a Rogue Drone}, year = {2020}, volume = {}, number = {}, pages = {7919-7926}, doi = {10.1109/IROS45743.2020.9340835}, }

2019

-

Decentralized Search and Track with Multiple Autonomous Agents
Savvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G. Panayiotou, and Marios M. Polycarpou
In 2019 IEEE 58th Conference on Decision and Control (CDC), 2019

In this paper we study the problem of cooperative searching and tracking (SAT) of multiple moving targets with a group of autonomous mobile agents that exhibit limited sensing capabilities. We assume that the actual number of targets is not known a priori and that target births/deaths can occur anywhere inside the surveillance region. For this reason, efficient search strategies are required to detect and track as many targets as possible. To address these challenges, we augment the classical Probability Hypothesis Density (PHD) filter with the ability to propagate the search density over time, in addition to the target density. Based on this, we develop decentralized cooperative look-ahead strategies for efficient searching and tracking of an unknown number of targets inside a bounded surveillance area. The performance of the proposed approach is demonstrated through simulation experiments.

@inproceedings{9029236, author = {Papaioannou, Savvas and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G. and Polycarpou, Marios M.}, booktitle = {2019 IEEE 58th Conference on Decision and Control (CDC)}, title = {Decentralized Search and Track with Multiple Autonomous Agents}, year = {2019}, volume = {}, number = {}, pages = {909-915}, doi = {10.1109/CDC40024.2019.9029236}, } -

Probabilistic Search and Track with Multiple Mobile Agents
Savvas Papaioannou, Panayiotis Kolios, Theocharis Theocharides, Christos G. Panayiotou, and Marios M. Polycarpou
In 2019 International Conference on Unmanned Aircraft Systems (ICUAS), 2019

In this paper we are interested in the task of searching and tracking multiple moving targets in a bounded surveillance area with a group of autonomous mobile agents. More specifically, we assume that targets can appear and disappear at random times inside the surveillance region and that their positions are random and unknown. The agents have a limited sensing range, and due to sensor imperfections they receive noisy measurements from the targets. In this work we utilize the theory of random finite sets (RFS) to capture the uncertainty in the time-varying number of targets and their states, and we propose a decision and control framework in which the mode of operation (i.e., search or track) as well as the mobility control action for each agent, at each time instance, are determined so that the collective goal of searching and tracking is achieved. Extensive simulation results demonstrate the effectiveness and performance of the proposed solution.

@inproceedings{8797831, author = {Papaioannou, Savvas and Kolios, Panayiotis and Theocharides, Theocharis and Panayiotou, Christos G. and Polycarpou, Marios M.}, booktitle = {2019 International Conference on Unmanned Aircraft Systems (ICUAS)}, title = {Probabilistic Search and Track with Multiple Mobile Agents}, year = {2019}, volume = {}, number = {}, pages = {253-262}, doi = {10.1109/ICUAS.2019.8797831}, }

2017

-

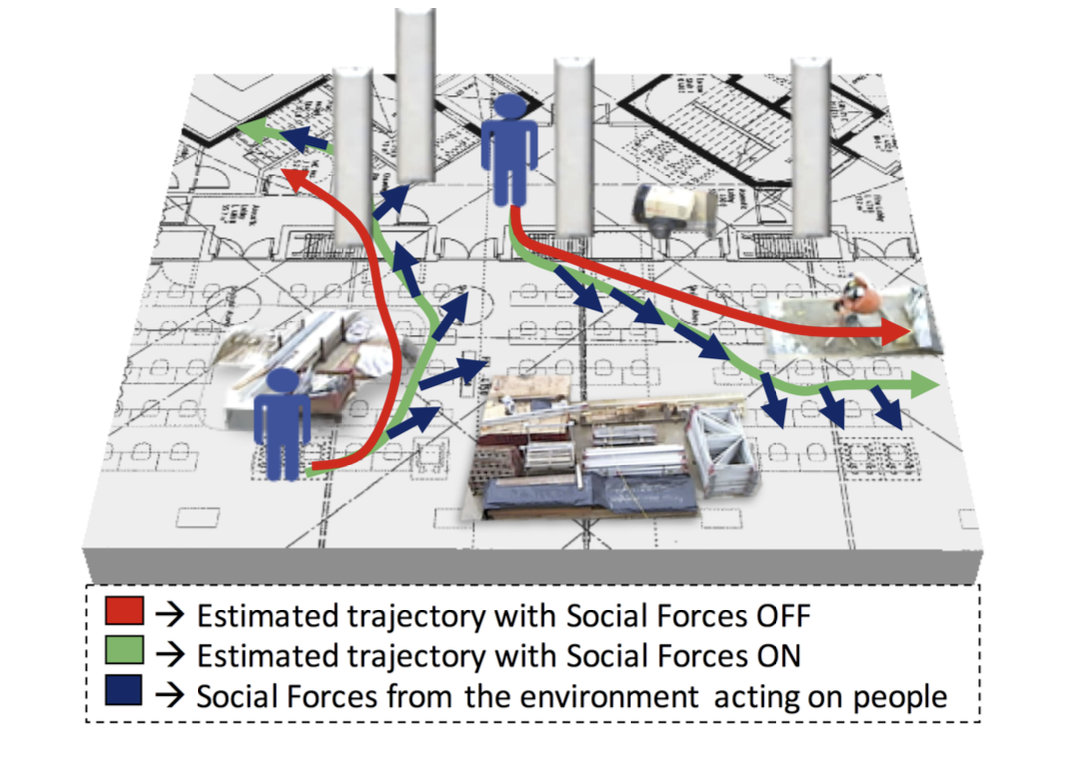

Tracking People in Highly Dynamic Industrial EnvironmentsSavvas Papaioannou, Andrew Markham, and Niki TrigoniIEEE Transactions on Mobile Computing, 2017

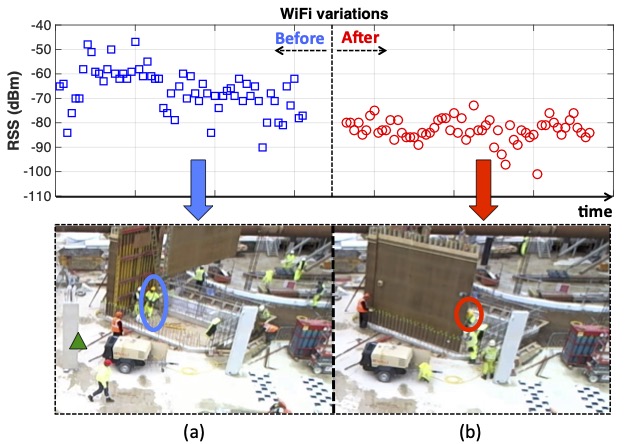

Tracking People in Highly Dynamic Industrial EnvironmentsSavvas Papaioannou, Andrew Markham, and Niki TrigoniIEEE Transactions on Mobile Computing, 2017To date, the majority of positioning systems have been designed to operate within environments that have a long-term stable macro-structure with potential small-scale dynamics. These assumptions allow the existing positioning systems to produce and utilize stable maps. However, in highly dynamic industrial settings these assumptions are no longer valid and the task of tracking people is more challenging due to the rapid large-scale changes in structure. In this paper, we propose a novel positioning system for tracking people in highly dynamic industrial environments, such as construction sites. The proposed system leverages the existing CCTV camera infrastructure found in many industrial settings along with radio and inertial sensors within each worker’s mobile phone to accurately track multiple people. This multi-target multi-sensor tracking framework also allows our system to use cross-modality training in order to deal with the environment dynamics. In particular, we show how our system uses cross-modality training in order to automatically keep track environmental changes (i.e., new walls) by utilizing occlusion maps. In addition, we show how these maps can be used in conjunction with social forces to accurately predict human motion and increase the tracking accuracy. We have conducted extensive real-world experiments in a construction site showing significant accuracy improvement via cross-modality training and the use of social forces.

@article{7576696, author = {Papaioannou, Savvas and Markham, Andrew and Trigoni, Niki}, journal = {IEEE Transactions on Mobile Computing}, title = {Tracking People in Highly Dynamic Industrial Environments}, year = {2017}, volume = {16}, number = {8}, pages = {2351-2365}, doi = {10.1109/TMC.2016.2613523}, }

2016

-

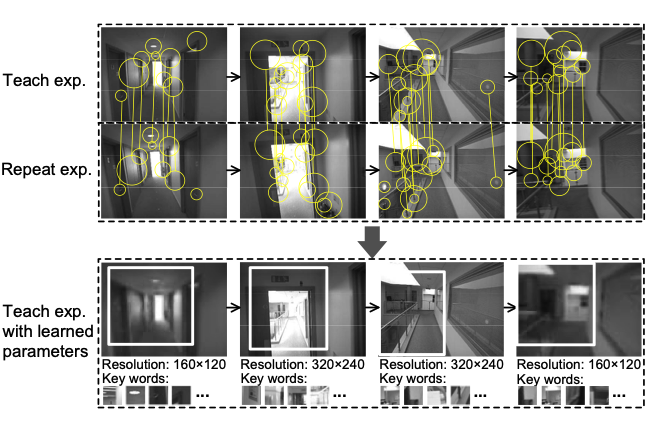

Poster Abstract: Efficient Visual Positioning with Adaptive Parameter Learning
Hongkai Wen, Sen Wang, Ronnie Clark, Savvas Papaioannou, and Niki Trigoni
In 2016 15th ACM/IEEE International Conference on Information Processing in Sensor Networks (IPSN), 2016

Positioning with vision sensors is gaining popularity, since it is more accurate and requires much less bootstrapping and training effort. However, one of the major limitations of existing solutions is the expensive visual processing pipeline: on resource-constrained mobile devices, it can take up to tens of seconds to process one frame. To address this, we propose a novel learning algorithm that adaptively discovers the place-dependent parameters for visual processing, such as which parts of the scene are more informative and what kind of visual elements one would expect, as it is used more and more by the users in a particular setting. With such meta-information, our positioning system dynamically adjusts its behaviour to localise the users with minimum effort. Preliminary results show that the proposed algorithm can significantly reduce the cost of visual processing and achieve sub-metre positioning accuracy.

@inproceedings{IPSN2016, author = {Wen, Hongkai and Wang, Sen and Clark, Ronnie and Papaioannou, Savvas and Trigoni, Niki}, booktitle = {2016 15th ACM/IEEE International Conference on Information Processing in Sensor Networks (IPSN)}, title = {Poster Abstract: Efficient Visual Positioning with Adaptive Parameter Learning}, year = {2016}, pages = {1-2}, doi = {10.1109/IPSN.2016.7460701}, }

2015

-

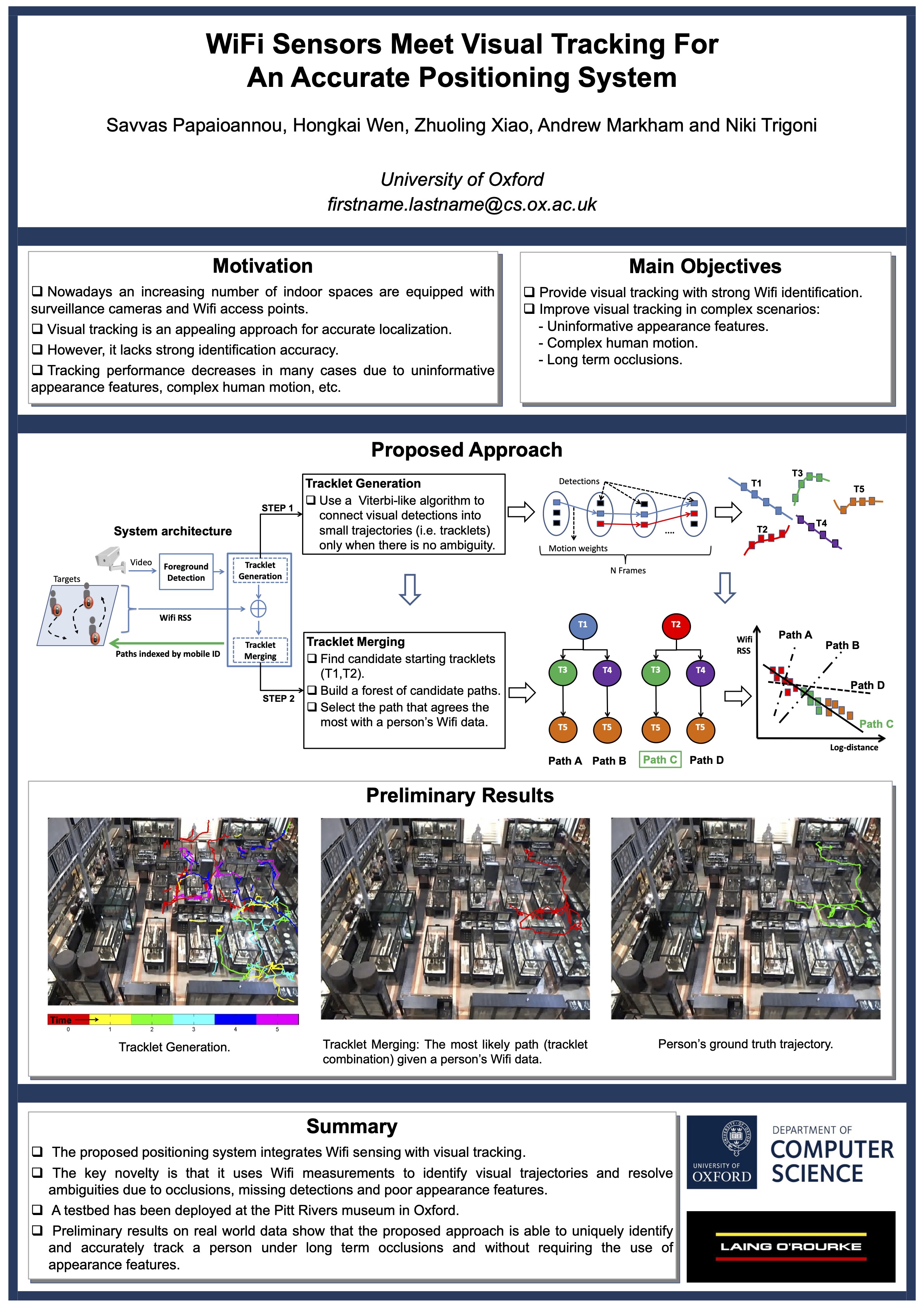

Accurate Positioning via Cross-Modality Training
Savvas Papaioannou, Hongkai Wen, Zhuoling Xiao, Andrew Markham, and Niki Trigoni
In Proceedings of the 13th ACM Conference on Embedded Networked Sensor Systems (SenSys), Seoul, South Korea, 2015

In this paper we propose a novel algorithm for tracking people in highly dynamic industrial settings, such as construction sites. We observed both short-term and long-term changes in the environment: people were allowed to walk in different parts of the site on different days, the field of view of fixed cameras changed over time with the addition of walls, and radio and magnetic maps proved unstable with the movement of large structures. To make things worse, the uniforms and helmets that people wear for safety make them very hard to distinguish visually, necessitating the use of additional sensor modalities. To address these challenges, we designed a positioning system that uses both anonymous and ID-linked sensor measurements and explores the use of cross-modality training to deal with environment dynamics. The system is evaluated on a real construction site and is shown to outperform state-of-the-art multi-target tracking algorithms designed to operate in relatively stable environments.

@inproceedings{10.1145/2809695.2809712, author = {Papaioannou, Savvas and Wen, Hongkai and Xiao, Zhuoling and Markham, Andrew and Trigoni, Niki}, title = {Accurate Positioning via Cross-Modality Training}, year = {2015}, isbn = {9781450336314}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/2809695.2809712}, doi = {10.1145/2809695.2809712}, booktitle = {Proceedings of the 13th ACM Conference on Embedded Networked Sensor Systems (SenSys)}, pages = {239–251}, numpages = {13}, keywords = {wireless sensor networks, tracking}, location = {Seoul, South Korea}, series = {SenSys '15}, } -

Opportunistic Radio Assisted Navigation for Autonomous Ground VehiclesHongkai Wen, Yiran Shen, Savvas Papaioannou, Winston Churchill, Niki Trigoni, and Paul NewmanIn 2015 International Conference on Distributed Computing in Sensor Systems (DCOSS), 2015

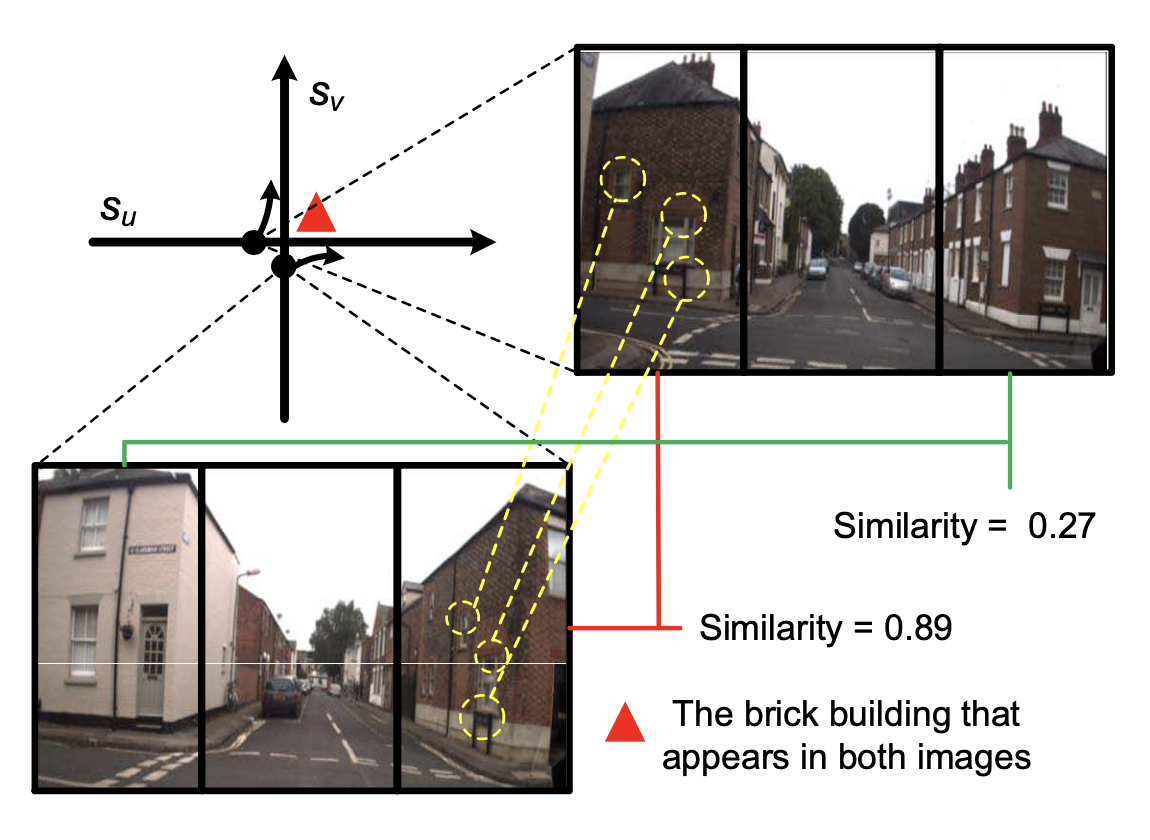

Opportunistic Radio Assisted Navigation for Autonomous Ground VehiclesHongkai Wen, Yiran Shen, Savvas Papaioannou, Winston Churchill, Niki Trigoni, and Paul NewmanIn 2015 International Conference on Distributed Computing in Sensor Systems (DCOSS), 2015Navigating autonomous ground vehicles with visual sensors has many advantages - it does not rely on global maps, yet is accurate and reliable even in GPS-denied environments. However, due to the limitation of the camera field of view, one typically has to record a large number of visual experiences for practical navigation. In this paper, we explore new avenues in linking together visual experiences, by opportunistically harvesting and sharing a variety of radio signals emitted by surrounding stationary access points and mobile devices. We propose a novel navigation approach, which exploits side-channel information of co-location to thread up visually-separated experiences with short exploration phases. The proposed approach empowers users to trade travel time for manual navigation effort, allowing them to choose the itinerary that best serves their needs. We evaluate the proposed approach with data collected from a typical urban area, and show that it achieves much better navigation performance in both reach ability and cost, comparing with the state of the arts that only use visual information.

@inproceedings{7165020, author = {Wen, Hongkai and Shen, Yiran and Papaioannou, Savvas and Churchill, Winston and Trigoni, Niki and Newman, Paul}, booktitle = {2015 International Conference on Distributed Computing in Sensor Systems (DCOSS)}, title = {Opportunistic Radio Assisted Navigation for Autonomous Ground Vehicles}, year = {2015}, volume = {}, number = {}, pages = {21-30}, doi = {10.1109/DCOSS.2015.22}, }

2014

-

Fusion of Radio and Camera Sensor Data for Accurate Indoor PositioningSavvas Papaioannou, Hongkai Wen, Andrew Markham, and Niki TrigoniIn 2014 IEEE 11th International Conference on Mobile Ad Hoc and Sensor Systems (MASS), 2014

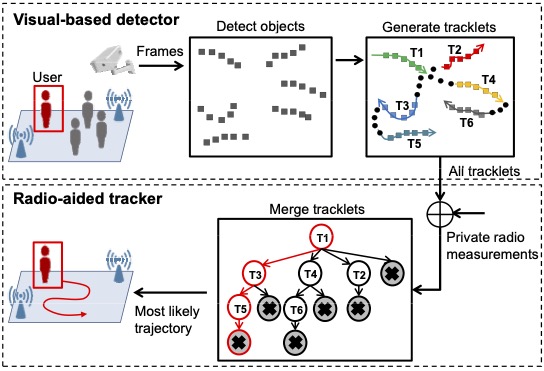

Fusion of Radio and Camera Sensor Data for Accurate Indoor PositioningSavvas Papaioannou, Hongkai Wen, Andrew Markham, and Niki TrigoniIn 2014 IEEE 11th International Conference on Mobile Ad Hoc and Sensor Systems (MASS), 2014Indoor positioning systems have received a lot of attention recently due to their importance for many location-based services, e.g. indoor navigation and smart buildings. Lightweight solutions based on WiFi and inertial sensing have gained popularity, but are not fit for demanding applications, such as expert museum guides and industrial settings, which typically require sub-meter location information. In this paper, we propose a novel positioning system, RAVEL (Radio And Vision Enhanced Localization), which fuses anonymous visual detections captured by widely available camera infrastructure, with radio readings (e.g. WiFi radio data). Although visual trackers can provide excellent positioning accuracy, they are plagued by issues such as occlusions and people entering/exiting the scene, preventing their use as a robust tracking solution. By incorporating radio measurements, visually ambiguous or missing data can be resolved through multi-hypothesis tracking. We evaluate our system in a complex museum environment with dim lighting and multiple people moving around in a space cluttered with exhibit stands. Our experiments show that although the WiFi measurements are not by themselves sufficiently accurate, when they are fused with camera data, they become a catalyst for pulling together ambiguous, fragmented, and anonymous visual tracklets into accurate and continuous paths, yielding typical errors below 1 meter.